Test documentation tips and best practices in software engineering

Testing is a pivotal phase of the software development lifecycle. It helps catch defects before release, but how testing is conducted and documented also plays a vital role in a project’s success.

Proper test documentation aids in creating a systematic and repeatable testing process, fostering better communication within the team and providing a foundation for future projects.

As we delve deeper into this topic, you’ll learn about the importance of test case documentation, grasp actionable tips on structuring and formatting test documentation, and discover the secrets to maintaining these crucial documents.

By the end, the goal is to elevate test documentation from a mundane task to an art form, giving it the importance it deserves.

Why spend time on test case documentation?

Developers are often in a rush and would rather not be bothered with documentation, let alone test case documentation. As a result, development teams tend to spend little time documenting test cases, test structure, or testing processes.

So, why would you spend time writing test case documentation? You should pursue test case documentation as an engineering team for three main reasons:

Knowledge sharing

Firstly, knowledge sharing has become a pillar within the engineering community. This argument is especially valid for test case documentation.

Whether a developer is a senior engineer or a newly onboarded team member, test documentation can get them up to speed quickly on a product area they don’t know well.

Test case documentation is not just a series of quality checks. It acts as a curated collection of functional insights. For any developer, test case docs show a feature’s expected behavior, edge cases, and potential pitfalls. In short, it provides a holistic view of how a particular product segment is supposed to work.

Consistency

Next, the quality of test documentation directly impacts testing outcomes. In other words, low-quality test documentation can lead to unreliable test results and decreased trust in the software the team is building. This poses a significant challenge when the goal is to deliver robust, high-quality software.

To combat this issue, it’s important to have consistent test documentation. Consistency is essential for any development team because it ensures test cases are executed the same way each time. This promotes trust in the test cases and overall software.

Feedback and continuous improvement

Lastly, test case documentation serves as a guide for writing future tests and as a feedback mechanism for software engineering teams. It is a valuable tool during retrospective meetings, offering insights into testing gaps and areas for improvement.

Consider a scenario where your latest release included multiple hotfixes for bugs that slipped through your testing suite. Your test documentation can tell you how you approached testing these software paths and why specific bugs were not caught.

To sum up, test case documentation allows you to review and improve your testing efforts continuously.

How to structure test documentation

Good test documentation begins by stating the objective of the test. It’s the first thing a developer reads when scanning through test cases, and it tells them a lot about the expected behavior of a product feature.

Next, outline the prerequisites or initial conditions that need to be met before the test is performed. This could include software versions, configurations, or any setup requirements. After this, write down the steps to execute a particular test. Make sure to provide clear and straightforward steps in sequential order.

Further, include the expected outcome so a developer or tester can verify the output. For long or complex test cases, including intermediary outcomes is beneficial to help developers verify if they are on the right track executing a set of tests.

Remember to detail clean-up steps for when the tests are completed. In some scenarios, cleaning up a database, environment, or virtual machine is essential to ensure it’s ready for future test runs.
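
The sections described above can also be captured as structured data, which makes it easy to check that no part is missing. The following is a minimal sketch, not a prescribed format: the field names, the sample prerequisites and clean-up entries, and the `findMissingFields` helper are all assumptions made for illustration.

```javascript
// Hypothetical shape for a documented test case. The field names mirror the
// sections described above; the sample values are illustrative only.
const loginTestCase = {
  id: "TC-001",
  objective: "Verify user login with valid credentials",
  prerequisites: ["A registered user account exists", "The login page is reachable"],
  steps: [
    "Navigate to the login page.",
    "Enter valid username.",
    "Enter valid password.",
    'Click the "Login" button.',
  ],
  expectedOutcome: "User is logged in and redirected to the dashboard.",
  cleanUp: ["Log the user out"],
};

const REQUIRED_FIELDS = ["id", "objective", "prerequisites", "steps", "expectedOutcome", "cleanUp"];

// Returns the names of required fields that are missing or empty.
function findMissingFields(testCase) {
  return REQUIRED_FIELDS.filter((field) => {
    const value = testCase[field];
    if (Array.isArray(value)) return value.length === 0;
    return typeof value !== "string" || value.trim() === "";
  });
}

console.log(findMissingFields(loginTestCase)); // []

// An incomplete draft: every field except "id" is reported.
console.log(findMissingFields({ id: "TC-002", steps: [] }));
```

A check like this could run during review so that incomplete test cases are flagged before they are merged.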

```text
### Test Case ID: TC-001

**Test Case Summary:** Verify user login with valid credentials

**Test Steps:**

1. Navigate to the login page.
2. Enter valid username.
3. Enter valid password.
4. Click the "Login" button.

**Expected Outcome:** User is successfully logged in and is redirected to the dashboard.
```

You don’t always need to write down all of this information. For instance, many JavaScript teams use the Arrange-Act-Assert (AAA) pattern to make backend unit tests largely self-explanatory.

The AAA approach consists of three parts that aid in clarifying the test case, mostly implicitly.

- Arrange: Includes all the setup code to prepare your scenario. For instance, it includes mocking/stubbing, adding database records, loading test data, or any other preparation code.

- Act: Usually a short section that executes code to verify a particular code path or feature.

- Assert: Verifies if the test outcome matches the expected outcome. The expected test outcome implicitly describes what a developer expects to see when executing this test.

Beyond that, the AAA approach is usually paired with test names that clearly state each test case’s scenario and expected outcome.

Here’s an example of a test that verifies whether a customer is classified as premium when they spend more than $500. Note that the test name passed to `test` describes both the scenario and the expected outcome, making it easy for developers to learn about a feature by reading the test cases.

```js
const sinon = require("sinon");
// Hypothetical module paths; point these at your own code.
const dataAccess = require("./data-access");
const customerClassifier = require("./customer-classifier");

describe("Customer classifier", () => {
  // Remove stubs after each test so they don't leak into other tests
  afterEach(() => sinon.restore());

  test("When customer spent more than $500, should be classified as premium", () => {
    // Arrange
    const customerToClassify = { spent: 505, joined: new Date(), id: 1 };
    sinon.stub(dataAccess, "getCustomer").returns({ id: 1, classification: "regular" });

    // Act
    const receivedClassification = customerClassifier.classifyCustomer(customerToClassify);

    // Assert
    expect(receivedClassification).toBe("premium");
  });
});
```

To summarize, be as descriptive as possible and use easy-to-understand data and function names to make it obvious what you are trying to verify.
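
To try the pattern without a test framework or a mocking library, a self-contained sketch along the following lines may help. It assumes Node.js; the `classifyCustomer` implementation, its `dataAccess` parameter, and the fallback to the stored classification are hypothetical and stand in for whatever the real classifier does.

```javascript
const { strictEqual } = require("node:assert");

// Hypothetical classifier. The $500 threshold comes from the example above;
// the fallback to the stored classification is an assumption.
function classifyCustomer(customer, dataAccess) {
  const stored = dataAccess.getCustomer(customer.id);
  return customer.spent > 500 ? "premium" : stored.classification;
}

function whenCustomerSpentMoreThan500_shouldBeClassifiedAsPremium() {
  // Arrange: build the input and a hand-written stub for the data layer
  const customerToClassify = { spent: 505, joined: new Date(), id: 1 };
  const dataAccessStub = {
    getCustomer: () => ({ id: 1, classification: "regular" }),
  };

  // Act
  const receivedClassification = classifyCustomer(customerToClassify, dataAccessStub);

  // Assert
  strictEqual(receivedClassification, "premium");
}

function whenCustomerSpentExactly500_shouldKeepStoredClassification() {
  // Arrange
  const customerToClassify = { spent: 500, joined: new Date(), id: 2 };
  const dataAccessStub = {
    getCustomer: () => ({ id: 2, classification: "regular" }),
  };

  // Act
  const receivedClassification = classifyCustomer(customerToClassify, dataAccessStub);

  // Assert
  strictEqual(receivedClassification, "regular");
}

whenCustomerSpentMoreThan500_shouldBeClassifiedAsPremium();
whenCustomerSpentExactly500_shouldKeepStoredClassification();
console.log("all AAA checks passed");
```

The second test documents the boundary: reading the two function names together tells a developer that the threshold is exclusive, without opening the implementation.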

How to maintain test documentation

Maintaining your test documentation is as crucial as creating it. Software evolves continuously, and your test documentation needs to evolve with it.

Let’s say you’ve added a new database to your product. Your existing test documentation knows nothing about this database, so you’ll need to update it to keep testing results consistent.

Here are some best practices to keep your testing docs up to date:

Processes

Define a regular cadence for reviewing your test documentation. For instance, you can review it after each sprint or software release.

Further, define a clear process for how to involve stakeholders in reviewing test cases. Stakeholders have a lot of business knowledge and can share feedback about missed test case scenarios.
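
One way to support such a review cycle is to record when each test case was last reviewed and flag the ones that are overdue. The sketch below assumes a hypothetical `lastReviewed` date on each test case and a 14-day threshold standing in for a two-week sprint; neither comes from a specific tool.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Returns the IDs of test cases whose last review is older than maxAgeDays.
function findStaleTestCases(testCases, now, maxAgeDays) {
  return testCases
    .filter((tc) => (now - new Date(tc.lastReviewed)) / MS_PER_DAY > maxAgeDays)
    .map((tc) => tc.id);
}

// Illustrative data; in practice this might be read from the docs themselves.
const testCases = [
  { id: "TC-001", lastReviewed: "2024-01-10" },
  { id: "TC-002", lastReviewed: "2024-03-01" },
];

// Flag anything that has gone more than 14 days without a review.
const stale = findStaleTestCases(testCases, new Date("2024-03-10"), 14);
console.log(stale); // [ 'TC-001' ]
```

Passing `now` as a parameter rather than calling `new Date()` inside the function keeps the check deterministic and easy to test.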

Ownership

It’s crucial to define clear roles and responsibilities for who creates, reviews, and updates test cases. For many organizations, it’s sufficient to identify a “test documentation champion” who manages test cases, oversees improvements, and involves stakeholders.

For larger codebases, having multiple test documentation champions for different areas of your product might be beneficial.

Tools

Tools can assist you in managing your test documentation. Starting with the basics, a version control system like Git can help you track changes to your test documentation.

Beyond Git, you may want to look into automation frameworks that can help you with testing. For instance, Selenium, a popular browser automation tool, can be combined with a test runner or reporting plugin to generate test reports automatically.

You can also use CI/CD pipelines to ensure the product and test documentation are in sync.
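
As a rough illustration of such a sync check, the sketch below compares test case IDs found in documentation with IDs found in automated test titles. It assumes a convention, not stated by any particular tool, that both use IDs of the form `TC-001`; the function names and sample inputs are hypothetical.

```javascript
// Matches IDs such as "TC-001" anywhere in a string (assumed ID format).
const TEST_CASE_ID = /TC-\d+/g;

// Collects every test case ID that appears in a list of strings.
function extractIds(texts) {
  return new Set(texts.flatMap((text) => text.match(TEST_CASE_ID) ?? []));
}

// Compares IDs found in documentation with IDs found in automated test titles.
function findDrift(docLines, testTitles) {
  const documented = extractIds(docLines);
  const automated = extractIds(testTitles);
  return {
    documentedButUntested: [...documented].filter((id) => !automated.has(id)),
    testedButUndocumented: [...automated].filter((id) => !documented.has(id)),
  };
}

const drift = findDrift(
  ["### Test Case ID: TC-001", "### Test Case ID: TC-002"],
  ["TC-001: logs in with valid credentials", "TC-003: rejects an invalid password"]
);

const hasDrift =
  drift.documentedButUntested.length > 0 || drift.testedButUndocumented.length > 0;
console.log(drift);
console.log(hasDrift ? "drift detected" : "docs and tests are in sync");
```

In a pipeline, the script would typically exit with a non-zero status when `hasDrift` is true so that the build fails until the documentation and tests are reconciled.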

Conclusion

It’s vital to keep test documentation up to date. It guides all testing activities, helping everyone on the team understand the goals, approaches, and outcomes of test cases. When test documentation isn’t kept up to date, you might face misunderstandings, mistakes, and gaps in your testing processes.

It’s important to remember that test documentation is part of the software development lifecycle. Outdated test documentation will negatively impact the trust in your tests and project quality.

In short, when you develop software, prioritize test documentation. It’s an investment that pays significant dividends in software quality, team efficiency, and overall project success.

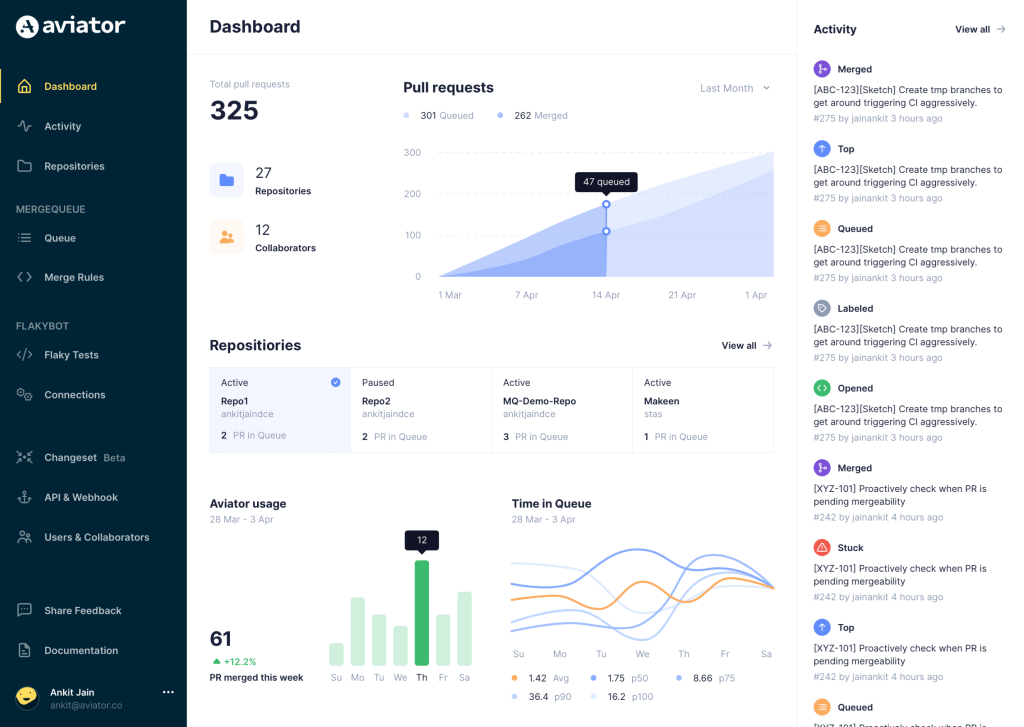

Aviator: Automate your cumbersome processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- TestDeck – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.