Everything wrong with DORA Metrics

DORA is an industry-standard metrics set for measuring and comparing DevOps performance. The framework was developed by the DevOps Research and Assessment (DORA) team, a Google Cloud-led initiative that promotes good DevOps practices.

The five DORA metrics are Deployment Frequency, Lead Time for Changes, Change Failure Rate, Time to Restore Service, and Reliability. By optimizing these areas, you can improve your software throughput while preserving quality.

These metrics aren’t a definitive route to DevOps success though. Here’s why DORA can make developers uncomfortable.

DORA metrics explained

First, let’s recap the five metrics and why they matter:

- Deployment Frequency – This is the number of deployments you make to production in a given time period. A high deployment frequency is usually desirable. It indicates you’re shipping changes regularly, as small pieces you can iterate upon.

- Lead Time for Changes – The lead time is defined as the time between a commit being made and that commit reaching production. It covers every stage in between, including code review, builds, tests, and the deployment itself. Too high a lead time can suggest there’s friction in your delivery pipeline.

- Change Failure Rate – The proportion of deployments to production that cause an incident to occur. You want this metric to remain as low as possible.

- Time to Restore Service – Some failures are inevitable. When an incident does begin, this metric measures the time required to resolve it.

- Reliability – This fifth metric is often overlooked as it was added after the original DORA announcement. This measures your ability to meet your reliability objectives such as SLAs, performance targets, and error budgets.
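To make the definitions above concrete, here’s a minimal sketch of how the first four metrics could be derived from a list of deployment records. The `Deployment` record and `dora_summary` function are hypothetical names invented for this illustration, and the sketch assumes an incident starts at the moment of the deployment that caused it. Real tooling will usually pull this data from your CI/CD and incident management systems instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    caused_incident: bool = False           # did this deployment trigger an incident?
    restored_at: Optional[datetime] = None  # when service was restored, if it did


def dora_summary(deployments: list[Deployment], period_days: int) -> dict:
    """Summarize four DORA metrics over a window of `period_days` days."""
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.caused_incident]
    # Assumption: the incident begins when the faulty deployment goes live.
    restore_times = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deploys_per_day": len(deployments) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deployments) if deployments else None,
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }
```

Medians are used here instead of means so that a single unusually slow change doesn’t dominate the result. Reliability is left out because it depends on objectives you define yourself.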

At face value, the metrics capture the essence of DevOps: rapidly deploying software, while minimizing the risk of failure. But their use can be problematic because data alone doesn’t explain why a trend’s occurring.

Problem 1: Lack of context

DORA doesn’t reveal why your deployment frequency is low, or change lead time is increasing. It could be due to an issue you can solve, such as too much friction during code review, or an unrelated reason that you can’t avoid.

Team sickness, slow starts during the ramp-up to a feature sprint, and holiday periods can all impact deployment frequency, for example, while change lead time can increase because a required code owner is away. It’s unreasonable to expect these slowdowns to disappear, but they’ll still impact your apparent DORA performance.

You should contextualize each metric by setting it against your organization’s working practices and product objectives. Set realistic targets for your product type, scale, and number of team members involved. A daily deployment frequency often makes sense for web apps, for example, but may not suit native mobile solutions that need to be distributed to customers through app stores.

The raw numbers in your DORA metrics are not useful for long-term analysis. It’s best to look at the trends in the data to gauge improvements over time. Once you’ve established a baseline for each team, you can begin a more meaningful analysis of any deviations from it. Otherwise, developers can be blamed for discrepancies that aren’t actually a problem.
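As a rough illustration of baseline-relative analysis, the sketch below compares each later week against the median of an initial baseline window. The function name `deviation_from_baseline` and the eight-week default are assumptions made for this example, not part of DORA.

```python
from statistics import median


def deviation_from_baseline(weekly_values: list[float], baseline_weeks: int = 8) -> list[float]:
    """Relative change of each later week against the baseline median.

    The first `baseline_weeks` values form the baseline; every value after
    that is reported as a fraction above (+) or below (-) the baseline.
    """
    if len(weekly_values) <= baseline_weeks:
        raise ValueError("need more weeks of data than the baseline window")
    baseline = median(weekly_values[:baseline_weeks])
    if baseline == 0:
        raise ValueError("baseline is zero; relative change is undefined")
    return [(v - baseline) / baseline for v in weekly_values[baseline_weeks:]]


# Weekly deployment counts: four baseline weeks, then two weeks to assess.
print(deviation_from_baseline([10, 12, 10, 8, 10, 5], baseline_weeks=4))
```

A week that sits 50% below the baseline is a prompt to ask what happened, such as a holiday period or an absent code owner, before treating it as a performance problem.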

Problem 2: Changing metrics creates uncertainty

DORA is just data. It doesn’t tell you what to do when the metrics change. This causes uncertainty because developers aren’t informed of what happens when the change failure rate increases or deployment frequency slows down.

DORA’s meant to guide you towards better DevOps. This only happens if you act upon what your metrics are telling you. Because the framework doesn’t provide answers to what happens in response to changes, you need to set clear internal expectations instead.

To be successful, you should integrate DORA into your engineering and SRE workflows. Make SRE decisions based on DORA trends, such as directing engineers to focus on bug fixes instead of new features once the change failure rate crosses an agreed threshold. This integration rarely happens though, so developers end up penalized for worsening metrics even when they have no opportunity to improve them.
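One way to set that expectation is to write the rule down as code, so everyone can see what happens when a metric moves. The sketch below is purely illustrative: the function name `next_sprint_focus`, the 15% threshold, and the minimum sample size are assumptions you would replace with values agreed by your own team.

```python
def next_sprint_focus(
    recent_outcomes: list[bool],
    threshold: float = 0.15,
    min_deploys: int = 10,
) -> str:
    """Suggest a focus for the next sprint from recent deployment outcomes.

    `recent_outcomes` holds one entry per deployment: True if it caused
    an incident, False otherwise.
    """
    if len(recent_outcomes) < min_deploys:
        # Too few deployments for the rate to be meaningful.
        return "insufficient-data"
    change_failure_rate = sum(recent_outcomes) / len(recent_outcomes)
    return "stabilize" if change_failure_rate >= threshold else "features"


# 2 failures in 10 deployments is a 20% change failure rate.
print(next_sprint_focus([True, True] + [False] * 8))
```

The point isn’t the specific numbers. It’s that the response to a changing metric is agreed ahead of time, instead of being decided after developers have already been blamed.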

Problem 3: You can optimize forever

There’s a flip side to diligently acting on DORA metrics: the framework won’t tell you what not to do, either. Teams can get stuck constantly trying to optimize their metrics, long past the point of diminishing returns. You don’t need to keep making adjustments after your performance meets your organization’s targets.

Comparing your team to similar peers can help identify whether you’re already a high performer. Industry reports such as Google Cloud’s annual Accelerate State of DevOps report also provide data for benchmarking yourself against others.

When you’re performing well, trying to make further improvements is unlikely to help you deliver more software to customers. Instead, you should pivot towards maintaining your performance at its current level. This is best done iteratively, by regularly reviewing performance, looking for any sticking points, and implementing targeted adjustments in your workflows.

Problem 4: Team comparison doesn’t work in practice

One of DORA’s roles is enabling cross-industry comparison of DevOps practices, between different teams and even different organizations. In practice, this is challenging to achieve because every team works differently and will have its own success criteria.

DevOps is a set of principles, not a specific collection of tools or concrete processes. DORA attempts to distill the universal objectives behind the principles, but implementations can still vary considerably in each team.

This ties to contextualizing your use of each metric. If your website developers have a higher deployment frequency than your mobile engineers, it’s important to remember the different operating environments of the teams you’re comparing. A lower deployment frequency may be expected and desirable for developers building native apps, but this won’t be automatically visible in straight-line comparisons. Developers end up feeling pressured to meet unobtainable targets set by neighboring teams.

Problem 5: DORA doesn’t fit every team

DORA’s a great starting point for analyzing DevOps performance but it doesn’t suit every team. Certain assumptions are built into the framework which aren’t relevant to all developers.

DORA works best when you intend to deploy frequently, rapidly iterate on small changes, and continually seek improvements to lead time, failure rate, and time to restore service. While these are arguably best practices for everyone in the software industry, some DevOps teams still require a longer cadence.

If you ship software monthly, your deployment frequency will always look low, for example. Similarly, the apparent lead time of new commits will be variable, with some commits getting deployed quickly (if they’re made near the end of the month), while others appear to wait for weeks. Your change failure rate will be less meaningful too, as fewer distinct changes will be going out to production.
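A quick worked example shows how wide that spread can be. The dates below are made up for illustration, and the helper `lead_times_for_release` is a hypothetical name; the sketch assumes a single release on the last day of the month.

```python
from datetime import date


def lead_times_for_release(commit_days: list[date], release_day: date) -> list[int]:
    """Lead time in days for each commit that ships in one release."""
    return [(release_day - day).days for day in commit_days]


release_day = date(2024, 1, 31)
commit_days = [date(2024, 1, 2), date(2024, 1, 16), date(2024, 1, 30)]

# Identical process, very different apparent lead times.
print(lead_times_for_release(commit_days, release_day))  # [29, 15, 1]
```

All three commits went through the same workflow, yet their lead times range from 1 to 29 days purely because of where they fell in the release cycle.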

In this situation, DORA can raise more questions than it resolves. Your metrics will appear poor compared to industry peers, but you’ll still be shipping work on time, satisfying customers, and keeping developer productivity aligned with expectations. It’s more useful to adapt DORA to include alternative metrics such as the number of tickets closed and the time taken to merge (instead of deploy) commits. These can be more accurate performance indicators when you’ve adopted an extended release cycle.

Problem 6: Metrics don’t reflect the nature of your success

On a similar theme, it’s important to link trends in DORA metrics back to the product work that’s caused them. DevOps success is ultimately about delivering value to customers, instead of merely creating impressive numbers on your DORA charts.

DORA metrics are susceptible to manipulation by meaningless development activities. If you frequently deploy a large number of minor changes, you’ll appear to have “good” DORA metrics (a high deployment frequency, with a low change lead time), but you won’t be providing any extra value to your users. Conversely, you might seem to be performing poorly until you look more closely and realize developers were occupied on a big new feature sprint. This may have since brought in hundreds of new customers.

Use DORA alongside other indicators of success, such as the trends in your business objectives. This ensures developer input will be recognized, even during crunch times when metrics may temporarily suffer but product value is significantly increased. DORA should usually be secondary to your organization’s KPIs, as it’s user counts, transaction volume, and raised support tickets that provide the clearest indication of whether developer activities are paying off.

Being pressured to continually improve is a demoralizing source of anxiety for developers. If the pressure’s sustained for long enough, without any successes being recognized, it could cause burnout and higher staff turnover.

Conclusion

DORA metrics measure DevOps success by looking at five key indicators common to high-performing teams. Effective DevOps implementations should accelerate software delivery without compromising quality, but it’s often unclear whether you’re actually achieving this objective. DORA metrics such as Deployment Frequency and Change Failure Rate provide tangible data to answer the question.

DORA doesn’t always provide the complete picture, though. Concentrating solely on DORA metrics often gives the wrong impression because they can’t explain why values change or fully unravel differences between teams. You should consider DORA metrics in the context of your product and business aims. If you’re gaining users without facing incidents, you’re probably succeeding at software delivery, even if DORA suggests otherwise for your team.

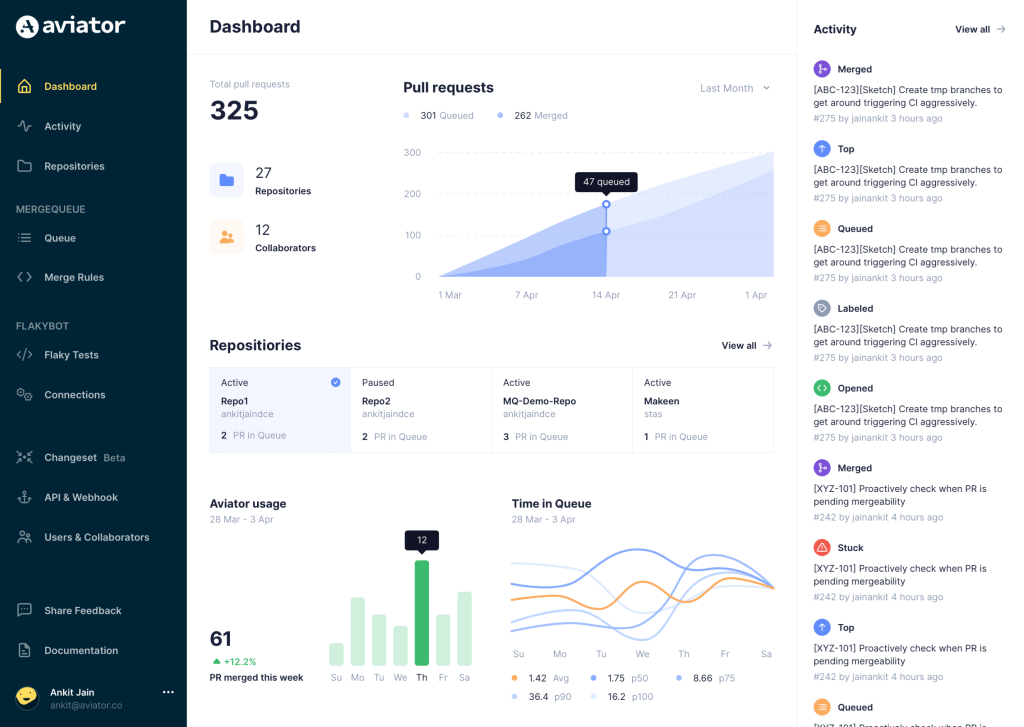
