AI-Driven DevSecOps: Building Intelligent CI/CD Pipelines

Discover how AI is revolutionizing DevSecOps. Learn about key AI technologies, best practices, and real-world examples of successful implementations.

The core principles of DevSecOps revolve around automating security processes and integrating security measures into every phase of development, from planning to deployment. Traditional DevSecOps approaches have limitations, however: they often rely on manual processes that slow down development cycles. These manual checks are prone to human error and can introduce delays in the CI/CD pipeline, making the overall process less efficient.

Furthermore, traditional security tools can struggle to keep up with the pace of modern development, potentially leaving vulnerabilities undiscovered until it’s too late. This reactive approach to security can result in breaches, compliance issues, and extra costs from fixing problems after they occur.

This is where AI-driven DevSecOps comes to the rescue. By integrating Artificial Intelligence (AI) into traditional DevSecOps practices, security becomes more proactive and efficient.

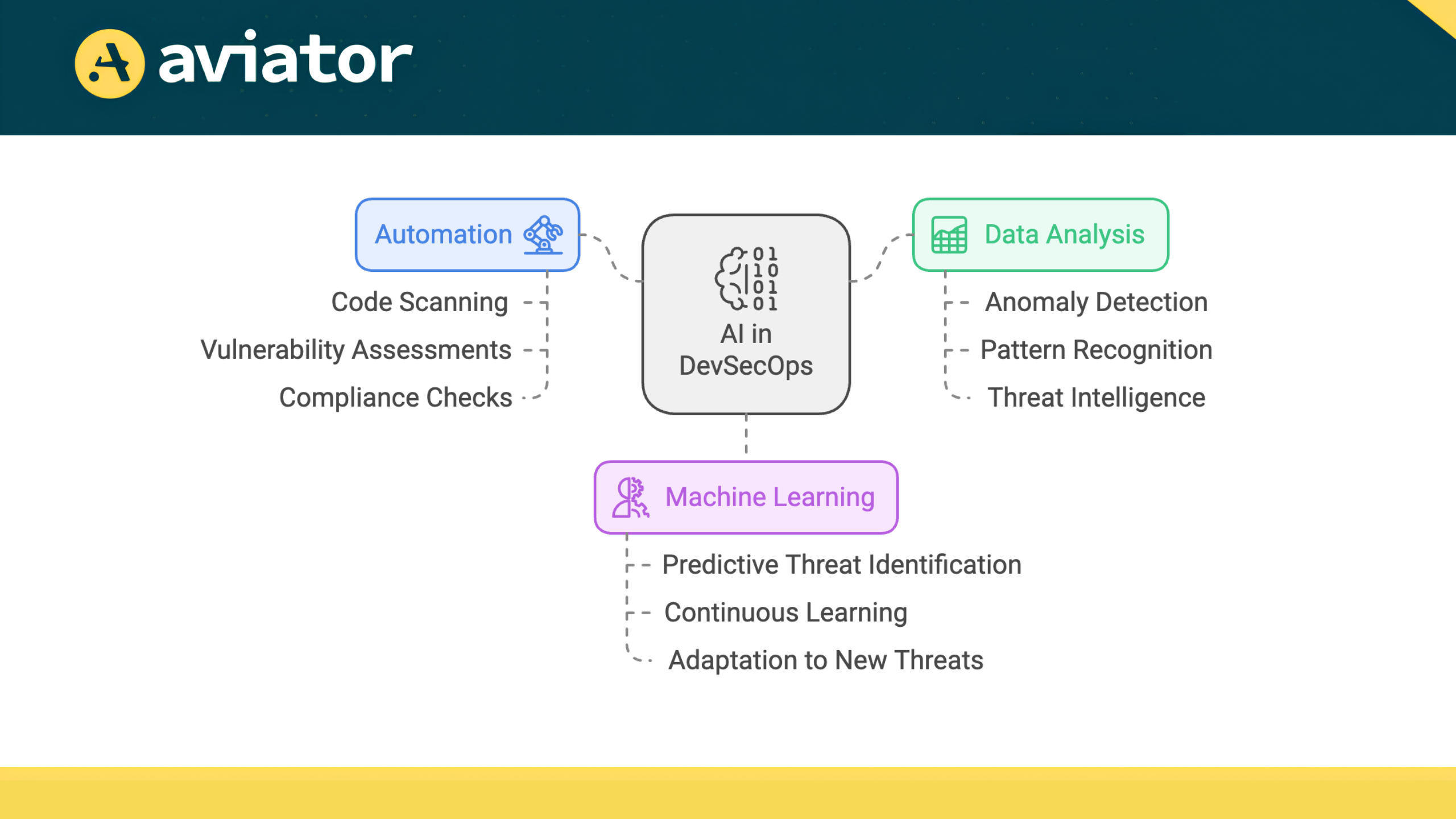

Understanding AI’s Role in DevSecOps

AI enhances security primarily through automation. Here’s how:

Automation of Repetitive Tasks

- Code Scanning: AI can automate the scanning of code for vulnerabilities, applying the same checks to every change instead of depending on a reviewer's attention.

- Vulnerability Assessments: Automated assessments help identify potential security weaknesses before they become issues.

- Compliance Checks: AI can verify that code and configurations meet security and compliance policies, so the same controls are applied consistently across all stages of development.
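However the findings are produced, by a rules engine or an ML-assisted scanner, applying security checks consistently usually comes down to a policy gate that every pipeline run enforces. The following is a minimal sketch, assuming each finding carries a severity label; the `Finding` type, the rule IDs, and the `fail_at` threshold are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass

# Ordering used to compare a finding's severity with the gate threshold.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Finding:
    rule_id: str   # identifier of the check that fired (hypothetical IDs below)
    path: str
    severity: str  # one of the keys in SEVERITY_RANK


def gate(findings, fail_at="high"):
    """Return (passed, blocking): blocking holds findings at or above fail_at."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f.severity] >= threshold]
    return not blocking, blocking


if __name__ == "__main__":
    findings = [
        Finding("HARDCODED-SECRET", "config.py", "critical"),
        Finding("MISSING-SECURITY-HEADER", "app.py", "low"),
    ]
    passed, blocking = gate(findings)
    print("passed" if passed else f"blocked by {[f.rule_id for f in blocking]}")
```

In a pipeline, a failed gate would exit with a non-zero status so the CI system marks the job as failed; the same threshold then applies to every branch and every run.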

Early Detection of Vulnerabilities

- Static and Dynamic Code Analysis: AI can augment both static analysis (inspecting source code without running it) and dynamic analysis (testing the running application) to identify vulnerabilities early.

- Faster Remediation: Early detection allows developers to resolve vulnerabilities quickly, reducing the risk of exploitation.
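The static half of this can be illustrated without any machine learning, because AI-assisted analyzers typically build on the same kind of syntax-tree inspection that classical static analysis performs. The sketch below uses Python's built-in `ast` module to flag two well-known risky patterns; the rule set is deliberately tiny and illustrative, and `find_risky_calls` is a hypothetical helper, not part of any scanner's API.

```python
import ast

# Built-in calls that commonly signal risky code; illustrative, not exhaustive.
DANGEROUS_CALLS = {"eval", "exec"}


def find_risky_calls(source, filename="<string>"):
    """Return sorted (line, message) pairs for risky patterns in Python source."""
    issues = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...)
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            issues.append((node.lineno, f"use of {node.func.id}()"))
        # Any call passing a literal shell=True, e.g. subprocess.run(cmd, shell=True)
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                issues.append((node.lineno, "call with shell=True"))
    return sorted(issues)


SAMPLE = '''
import subprocess
user_input = input()
eval(user_input)
subprocess.run("ls " + user_input, shell=True)
'''

if __name__ == "__main__":
    for line, message in find_risky_calls(SAMPLE):
        print(f"line {line}: {message}")
```

Because the check runs on source code alone, it can execute on every commit, long before a build reaches a test environment.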

Data Analysis Capabilities

- Processing Large Datasets: AI excels at analyzing vast amounts of data from various sources, such as:

  - Code repositories

  - Logs

  - Threat intelligence feeds

- Identifying Anomalies and Patterns: This capability enables AI to spot anomalies and to flag code patterns similar to those exploited in past projects, alerting teams to potential risks before they escalate.
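Anomaly flagging in log data can start from a simple statistical baseline; an ML model replaces the baseline with something learned, but the shape of the check is the same. A minimal sketch, assuming hourly counts of failed logins have already been extracted from logs; the counts in `HISTORY` and the 3-standard-deviation threshold are illustrative assumptions.

```python
import statistics


def zscore(history, value):
    """Number of sample standard deviations `value` lies from the mean of `history`."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # requires at least two data points
    if stdev == 0:
        return 0.0 if value == mean else float("inf")
    return (value - mean) / stdev


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it deviates from the historical baseline by more than `threshold` stdevs."""
    return abs(zscore(history, value)) > threshold


# Hypothetical hourly counts of failed logins from the previous 12 hours.
HISTORY = [4, 5, 3, 6, 4, 5, 4, 3, 5, 6, 4, 5]

if __name__ == "__main__":
    for count in (6, 60):
        print(count, is_anomalous(HISTORY, count))
```

Because the baseline is computed from history only, a single spike cannot inflate the standard deviation it is being measured against.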

Machine Learning’s Role

- Recognizing Patterns: Machine learning algorithms can be trained on historical data to recognize patterns associated with security incidents.

- Predictive Capability: By learning from past vulnerabilities, these algorithms can identify similar weaknesses in current codebases, allowing teams to focus on critical areas.

- Continuous Improvement: As more data is processed, machine learning models improve their accuracy in identifying threats and vulnerabilities.
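To make the pattern-recognition idea concrete, here is a toy multinomial naive Bayes classifier that learns which tokens distinguish vulnerable from safe code snippets. This is a swapped-in, deliberately simple technique, not the method any particular product uses, and the four training snippets in `TRAIN` are invented for illustration; a useful model would need a large labeled history of real vulnerabilities.

```python
import math
import re
from collections import Counter

# Identifiers, plus "+" (string concatenation) and "?" (a bound query parameter),
# two tokens that often separate unsafe from safe query construction.
TOKEN_RE = re.compile(r"\w+|[+?]")


def tokenize(code):
    return TOKEN_RE.findall(code)


class NaiveBayes:
    """Multinomial naive Bayes over code tokens, with add-one (Laplace) smoothing."""

    def fit(self, samples, labels):
        self.priors = Counter(labels)
        self.counts = {label: Counter() for label in self.priors}
        for code, label in zip(samples, labels):
            self.counts[label].update(tokenize(code))
        self.vocab = set().union(*self.counts.values())
        self.totals = {label: sum(c.values()) for label, c in self.counts.items()}
        return self

    def predict(self, code):
        n = sum(self.priors.values())
        scores = {}
        for label in self.priors:
            score = math.log(self.priors[label] / n)
            for token in tokenize(code):
                if token not in self.vocab:
                    continue  # tokens never seen in training carry no evidence here
                count = self.counts[label][token]
                score += math.log((count + 1) / (self.totals[label] + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Invented toy data: two unsafe and two safe snippets.
TRAIN = [
    ('cursor.execute("SELECT * FROM users WHERE id=" + user_id)', "vulnerable"),
    ('os.system("ping " + host)', "vulnerable"),
    ('cursor.execute("SELECT * FROM users WHERE id = ?", (user_id,))', "safe"),
    ('subprocess.run(["ping", host], check=True)', "safe"),
]

if __name__ == "__main__":
    model = NaiveBayes().fit([s for s, _ in TRAIN], [label for _, label in TRAIN])
    print(model.predict('cursor.execute("SELECT name FROM orders WHERE ref=" + ref)'))
```

With this training data, the `+` token (string concatenation into a command or query) pushes a snippet toward `vulnerable`, while `?` (a bound parameter) pushes it toward `safe`. Refitting with more labeled examples is the continuous-improvement loop described above.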

This continuous learning is essential in a rapidly evolving threat environment where new vulnerabilities emerge regularly. The same advancements extend naturally to CI/CD pipelines. In the next section, we look at how CI/CD pipelines are evolving with AI, and how AI is used to streamline development cycles, improve collaboration, and help teams deliver high-quality software more efficiently.

The Evolution of CI/CD Pipelines with AI

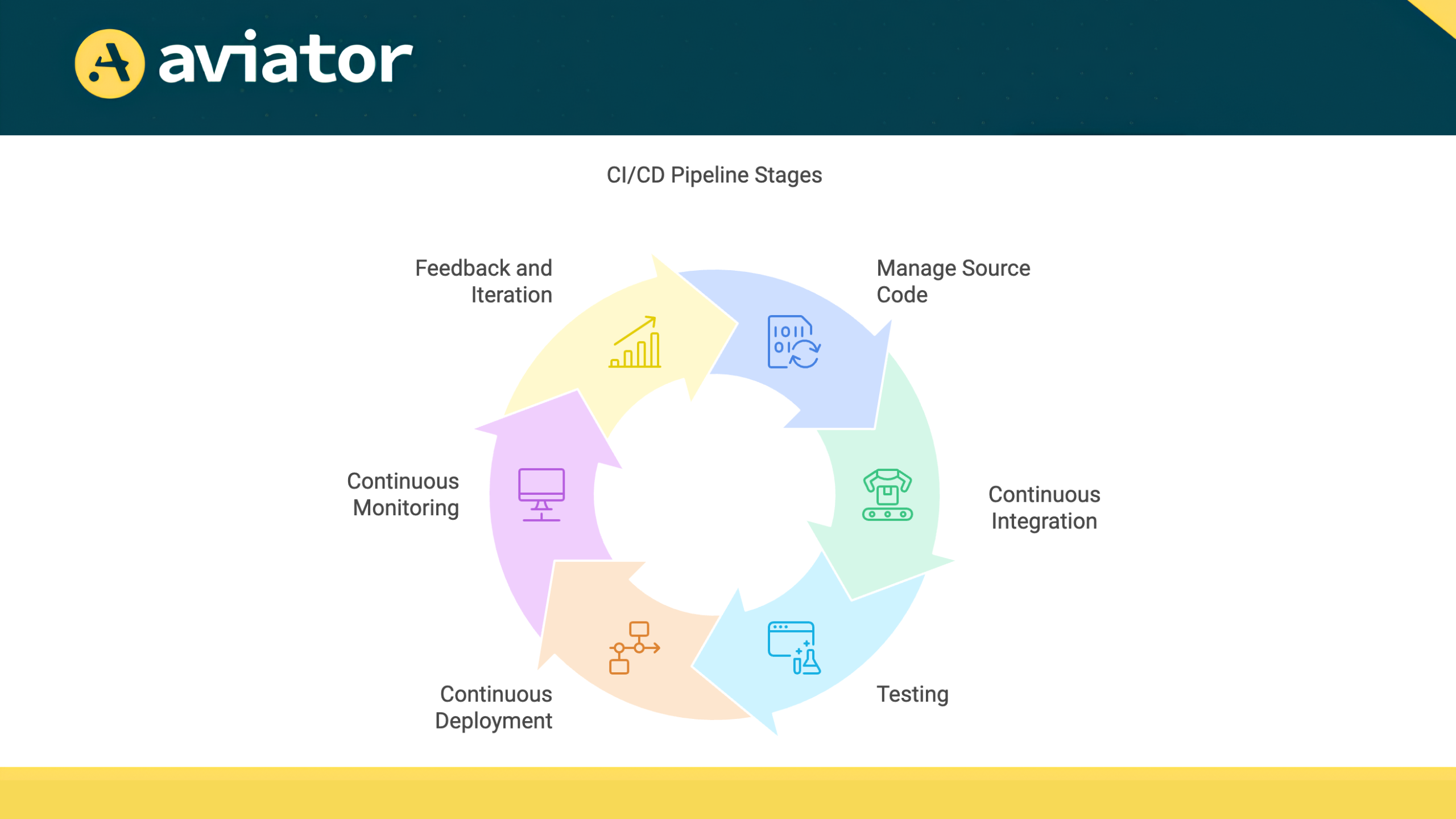

Typical CI/CD Pipeline Architecture

A CI/CD (Continuous Integration and Continuous Deployment) pipeline automates building, testing, and releasing software, enabling teams to deliver high-quality software efficiently. Its architecture consists of several key stages, each serving a specific purpose in the software delivery lifecycle.

- Source Code Management: Developers commit code changes to a version control system like Git, ensuring centralized storage and collaboration.

- Continuous Integration (CI): Automated builds are triggered to compile code and run unit tests, catching errors early in the development process.

- Testing: Various tests, including integration and performance tests, verify that the application behaves as expected before deployment.

- Continuous Deployment (CD): The application is deployed to staging or production environments using strategies like blue-green deployments to minimize risks.

- Continuous Monitoring: Tools track application performance and health in real-time, helping teams identify anomalies and respond promptly.

- Feedback and Iteration: User feedback is gathered to inform improvements, ensuring the application evolves based on real-world usage.
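These stages run in a fixed order, and a failure at any stage halts everything downstream; that is what lets a failing test block a deployment. A minimal sketch of that control flow, where each stage is a hypothetical placeholder function standing in for calls to real build, test, and deployment tooling:

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order; stop at the first step that returns False.

    Returns (succeeded, completed_stage_names, failed_stage_name_or_None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return False, completed, name
        completed.append(name)
    return True, completed, None


# Hypothetical placeholder stages; real ones would invoke Git, a build tool, a test runner, etc.
def checkout():
    return True


def build():
    return True


def unit_tests():
    return False  # simulate a failing test run


def deploy():
    return True


if __name__ == "__main__":
    ok, done, failed = run_pipeline(
        [("checkout", checkout), ("build", build), ("test", unit_tests), ("deploy", deploy)]
    )
    print(ok, done, failed)  # prints: False ['checkout', 'build'] test
```

The `deploy` step never runs because the test stage failed; monitoring and feedback then operate only on builds that passed every earlier gate.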

Challenges in Traditional CI/CD Pipelines

Despite their advantages, traditional CI/CD pipelines face several challenges and bottlenecks. Manual processes often slow down development cycles, delaying deployments.

For instance, code reviews and testing can become time-consuming, especially when teams are large and the codebase is complex. Additionally, these manual checks are susceptible to human error, which can introduce vulnerabilities or bugs into production.

Traditional security tools may also struggle to keep pace with rapid development, leaving potential security gaps that can be exploited by malicious actors. These issues highlight the need for more efficient and intelligent solutions within CI/CD pipelines.

AI-Driven Optimization of CI/CD Pipelines

AI can help overcome these challenges by optimizing various aspects of the CI/CD pipeline. Here are some ways AI can enhance CI/CD pipelines:

- Automated code testing: AI-driven testing tools can analyze changes, generate relevant test cases, and run comprehensive test suites to check both functionality and security. For instance, tools like Mabl can create tests based on application behavior and adjust them automatically as the application evolves. When a developer pushes a change, the pipeline can trigger these tests without manual intervention, significantly reducing manual testing effort.

- Intelligent deployment and monitoring: AI can support smarter decisions based on historical data, such as choosing when to deploy updates and identifying risks associated with new code. Tools like Harness.io or Dynatrace analyze deployment metrics and historical data to assess the health of each release. For instance, if response times spike after a deployment, such a tool can alert the development team and point toward the likely root cause, allowing quick remediation before users are impacted.

- Automated code reviews: One of the most impactful applications of AI in CI/CD pipelines is automated code review and error detection. For example, if a developer submits a pull request that introduces a vulnerability, a tool like DeepSource can flag it immediately, allowing quick remediation before the code is merged. This helps maintain code quality and consistency throughout the development process.

- Error detection and performance optimization: AI can analyze logs, metrics, and user feedback to identify performance bottlenecks and potential errors. Tools such as New Relic apply AI to this telemetry in real time, monitoring application behavior continuously to predict bottlenecks. For instance, if user interactions indicate slowing response times, these tools can alert the development team to investigate before users experience significant delays.
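Tools like these learn what normal behavior looks like for each service. A much simpler stand-in, shown only to illustrate the decision they automate, compares median response time after a deployment with a pre-deployment baseline and recommends a rollback when latency grows beyond a tolerance. The 20% tolerance, the function names, and the sample latencies are assumptions for illustration, not values used by any of the tools named above.

```python
import statistics


def detect_regression(baseline_ms, current_ms, max_increase=0.20):
    """True if the current median latency exceeds the baseline median by more than max_increase."""
    base = statistics.median(baseline_ms)
    current = statistics.median(current_ms)
    return current > base * (1 + max_increase)


def post_deploy_decision(baseline_ms, current_ms):
    """Hypothetical policy: roll back on a latency regression, otherwise promote the release."""
    return "rollback" if detect_regression(baseline_ms, current_ms) else "promote"


if __name__ == "__main__":
    baseline = [100, 110, 105, 98, 102]  # ms, sampled before the deployment
    after = [150, 160, 155, 149, 158]    # ms, sampled after the deployment
    print(post_deploy_decision(baseline, after))  # prints: rollback
```

Using the median rather than the mean keeps a single slow request from triggering a rollback on its own.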

In summary, integrating AI into CI/CD pipelines is a significant evolution in how software is developed and deployed. By addressing traditional challenges and bottlenecks, AI enhances automation, improves accuracy, and makes development more efficient. Organizations that adopt AI-driven solutions can expect faster delivery, better software quality, and a stronger security posture throughout the development lifecycle. Let’s explore how AI-driven DevSecOps enhances security within CI/CD pipelines, integrating security measures throughout the software delivery process.

Enhancing Security in CI/CD with AI-Driven DevSecOps

AI-driven DevSecOps strengthens security by identifying vulnerabilities and responding to incidents in real time. Here’s how AI can transform security within CI/CD processes:

Identifying Security Threats in Real-Time

AI models can analyze the large volumes of data generated during the CI/CD process, including code changes, build logs, and deployment metrics. By continuously monitoring the pipeline, they can identify security threats as they emerge. For instance, by scrutinizing code commits and build processes, AI can detect unusual patterns that might signal malicious activity, such as unauthorized access attempts or the introduction of vulnerable code. If a developer attempts to commit code that contains known vulnerabilities, AI-equipped tools can automatically flag the change and alert the team before it is merged into the main branch.

Additionally, AI can assess the risk levels of incoming code changes by comparing them against established security baselines, allowing for a more nuanced understanding of potential threats. This proactive approach enables teams to address potential risks before they escalate into significant security incidents, ultimately reducing the likelihood of costly breaches and enhancing overall software integrity. By integrating AI into the CI/CD pipeline, organizations can not only improve their security posture but also streamline their development processes, fostering a culture of continuous improvement and vigilance.
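Risk scoring against a baseline can be sketched as a weighted score over a few properties of a change, compared with a review threshold. This is a hand-weighted sketch under stated assumptions: the features, the `SENSITIVE_PREFIXES` paths, the weights, and the 0.5 threshold are all hypothetical, and an AI-driven system would learn them from historical changes and incidents rather than hard-coding them.

```python
from dataclasses import dataclass

# Hypothetical: paths whose changes deserve extra scrutiny.
SENSITIVE_PREFIXES = ("auth/", "crypto/", ".github/workflows/")

# Hypothetical weights; a trained model would learn these from labeled history.
WEIGHTS = {"size": 0.3, "sensitive": 0.4, "dependency": 0.2, "new_author": 0.1}


@dataclass
class Change:
    paths: list
    lines_changed: int
    adds_dependency: bool
    author_prior_commits: int


def risk_score(change):
    """Weighted score in [0, 1]; higher means riskier."""
    size = min(change.lines_changed / 500, 1.0)  # saturate at 500 changed lines
    sensitive = any(p.startswith(SENSITIVE_PREFIXES) for p in change.paths)
    new_author = change.author_prior_commits < 5
    return (
        WEIGHTS["size"] * size
        + WEIGHTS["sensitive"] * sensitive
        + WEIGHTS["dependency"] * change.adds_dependency
        + WEIGHTS["new_author"] * new_author
    )


def needs_security_review(change, baseline=0.5):
    """Route changes whose score exceeds the baseline to a human security review."""
    return risk_score(change) > baseline


if __name__ == "__main__":
    small_fix = Change(["auth/session.py"], 120, False, 40)
    risky = Change(["auth/login.py", "requirements.txt"], 600, True, 2)
    print(needs_security_review(small_fix), needs_security_review(risky))
```

Keeping the score explainable (each term maps to a visible property of the change) makes it easier for reviewers to see why a change was routed to them.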

Automated Incident Response and Remediation

AI can significantly streamline incident response. For instance, AI-powered Security Orchestration, Automation, and Response (SOAR) platforms can automatically triage security alerts by analyzing the severity, context, and potential impact of each one. Based on predefined playbooks and decision trees, SOAR platforms can then initiate appropriate remediation actions, such as isolating affected systems, blocking malicious IP addresses, or triggering further investigation.

If a vulnerability is detected in a newly deployed build, the system can automatically roll back to the previous stable version, notify the development team, and initiate a scan to identify the source of the vulnerability. This automation can cut response times from hours or days to minutes, minimizing the window of exposure and the impact of security incidents. By handling routine tasks autonomously, AI-driven SOAR platforms free security teams to focus on more strategic work, such as threat hunting, risk analysis, and proactive security measures.
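The playbook-driven part of this flow can be sketched as a lookup from alert type to an ordered list of actions, with severity deciding whether the playbook runs automatically or waits for an analyst. In a real SOAR platform the severity would come from AI-assisted triage; here it is simply given. The alert kinds, action names, and the severity threshold of 4 are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    kind: str      # hypothetical alert type, e.g. "vulnerable_build"
    severity: int  # 1 (low) to 5 (critical), assumed to come from upstream triage
    asset: str     # affected service or host


# Hypothetical playbooks: alert kind -> ordered remediation steps.
PLAYBOOKS = {
    "vulnerable_build": ["rollback_to_last_stable", "notify_dev_team", "start_source_scan"],
    "malicious_ip": ["block_ip", "notify_security_team"],
    "compromised_host": ["isolate_host", "snapshot_disk", "notify_security_team"],
}


def triage(alert, auto_threshold=4):
    """Return (mode, actions): 'auto' runs the playbook now, 'manual' waits for a human."""
    actions = PLAYBOOKS.get(alert.kind)
    if actions is None:
        return "manual", ["escalate_to_analyst"]  # no playbook: always involve a person
    if alert.severity >= auto_threshold:
        return "auto", actions
    return "manual", actions


if __name__ == "__main__":
    print(triage(Alert("vulnerable_build", 5, "payments-api")))
```

Keeping unknown alert types on the manual path is a deliberate design choice: automation only acts where a playbook has been reviewed in advance.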

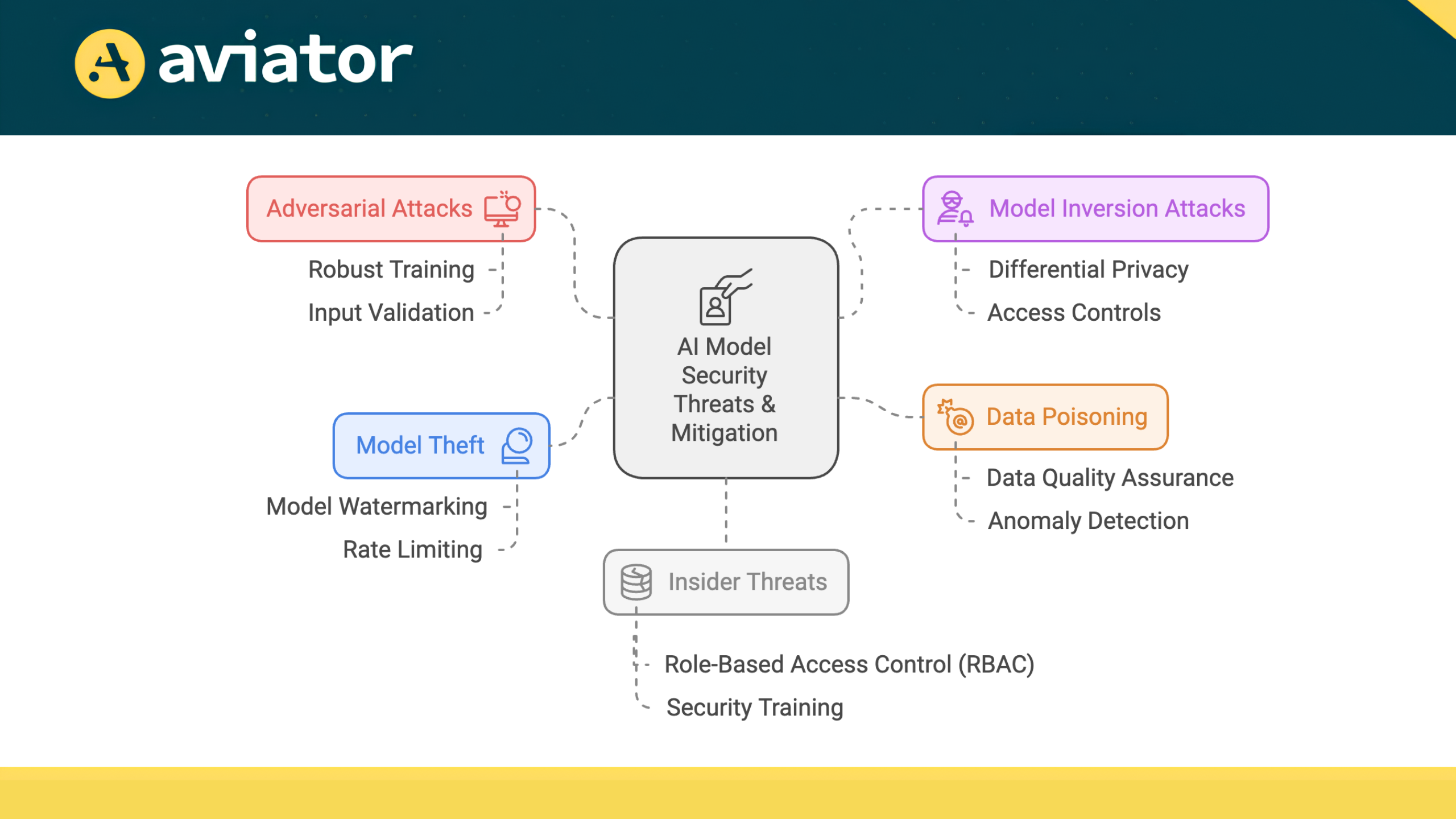

Ensuring the Security of AI Models

While AI can enhance security in CI/CD pipelines, it is essential to secure the AI models themselves. AI systems are exposed to attacks such as adversarial inputs, where malicious actors manipulate input data to deceive the model. Here are some common attack vectors and strategies to mitigate them:

- Adversarial Attacks: These attacks involve manipulating input data to deceive AI models into making incorrect predictions or classifications. For example, an attacker might subtly alter images used in a computer vision model, causing it to misidentify objects.

  Mitigation Strategies:

  - Robust Training: Train AI models using adversarial examples to improve their resilience against such attacks. This involves exposing the model to various manipulated inputs during the training phase.

  - Input Validation: Implement strict input validation processes to detect and reject anomalous data that could indicate an adversarial attack.

- Model Inversion Attacks: In this scenario, attackers attempt to extract sensitive information from an AI model by querying it with specific inputs. For instance, they might exploit a machine learning model trained on sensitive data to reconstruct private information about individuals.

  Mitigation Strategies:

  - Differential Privacy: Incorporate differential privacy techniques that add calibrated noise during training or to the model’s outputs, making it difficult for attackers to infer information about individual training records.

  - Access Controls: Enforce strict access controls and authentication mechanisms to limit who can query the model and under what conditions.

- Data Poisoning: This attack involves injecting malicious data into the training dataset, which can lead to compromised model performance. For example, if an attacker can manipulate the data used to train a spam detection model, they could cause it to misclassify legitimate emails as spam.

  Mitigation Strategies:

  - Data Quality Assurance: Implement rigorous data validation and cleansing processes to ensure the integrity of the training data. Regular audits of the data sources can help identify and remove potentially malicious entries.

  - Anomaly Detection: Use AI-driven anomaly detection systems to monitor training data for unusual patterns that may indicate poisoning attempts.

- Model Theft: Attackers may attempt to replicate or steal AI models, for example by querying a model extensively and using its responses to train a functionally equivalent copy. This can lead to the unauthorized use of proprietary algorithms.

  Mitigation Strategies:

  - Model Watermarking: Employ techniques to watermark AI models, embedding unique identifiers that can help trace unauthorized copies back to the original source.

  - Rate Limiting: Implement rate limiting on model queries to prevent excessive access that could facilitate model theft.

- Insider Threats: Employees or contractors with access to AI models may intentionally or unintentionally compromise security by misusing their access or failing to follow security protocols.

  Mitigation Strategies:

  - Role-Based Access Control (RBAC): Implement RBAC to ensure that individuals only have access to the resources necessary for their roles. Regularly review and update access permissions.

  - Security Training: Provide ongoing training for all personnel involved in the development and deployment of AI models, emphasizing the importance of security best practices and awareness of potential insider threats.
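Of the mitigations above, rate limiting is the most mechanical to illustrate. A minimal token-bucket sketch, assuming model queries pass through a gateway you control; `ModelGateway`, its per-API-key buckets, and the capacity and rate values are hypothetical, and the clock is injectable so the limiter can be exercised without real waiting.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ModelGateway:
    """Hypothetical front door for a model: one token bucket per API key."""

    def __init__(self, predict, capacity=100, rate=1.0, clock=time.monotonic):
        self.predict = predict
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets = {}

    def query(self, api_key, features):
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.capacity, self.rate, self.clock)
        if not self.buckets[api_key].allow():
            raise PermissionError(f"rate limit exceeded for key {api_key!r}")
        return self.predict(features)
```

A token bucket tolerates short bursts from legitimate clients while capping the sustained query rate, which raises the cost of extracting a model through very large numbers of queries. It does not stop a patient attacker on its own, so it is usually paired with the access controls described above.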

By addressing these potential attack vectors with proactive security measures, organizations can significantly enhance the security of AI models within their CI/CD pipelines. This not only protects the integrity of the software development process but also fosters trust in AI-driven solutions.

Conclusion

AI can revolutionize how we build and secure software. It speeds up work by automating tasks, spots threats early, and smooths out development pipelines. With rapid problem-solving capabilities and continuous self-improvement, AI can transform DevSecOps. While AI brings many benefits, we must remember to keep AI systems themselves safe. By using AI wisely, teams can create better, safer software more quickly than ever before. This helps businesses keep up with fast-changing technology and customer needs. The future of DevSecOps is here, and it’s powered by AI, opening up new possibilities for innovation and security in software development.