McKinsey developer productivity metrics: Opportunity isn’t the goal

Understanding software team performance is essential if business leaders and engineering managers are to accurately assess optimizations that could improve throughput. However, measuring performance precisely remains a challenge for many organizations.

It’s often unclear which metrics should be used, how to analyze them, and whether improving them will actually increase your team’s output.

A recent McKinsey report argues that software teams should focus their benchmarks on opportunities. An opportunity represents the possibility of effecting an improvement in product quality or the efficiency of the development process.

In this article, we’ll analyze this method and how it complements established frameworks like DORA and SPACE.

What is opportunity-driven performance measurement?

Software development is a multi-faceted, collaborative, and iterative discipline that requires continual effort from everyone on the team. Modern software companies rarely have idle time; as soon as one feature sprint ends, the focus immediately moves to the next one.

Therefore, it’s important that the development cycle facilitates gradual improvements over time, to ensure the team can keep making productivity enhancements without being overwhelmed by the burden of existing work.

These pathways to improvement are the “opportunities” described by McKinsey’s methodology. The approach is designed to let organizations analyze whether their teams are able to move forward, without demanding the use of heavy instrumentation.

Assessing whether teams have the opportunity to improve provides several benefits:

- Determine whether a team is at risk of being overworked: Teams with few opportunities to improve will be too busy to take on additional work or prioritize internal optimizations.

- Understand how teams feel about their work: Fewer opportunities can indicate that teams are stifled in their creative abilities. If all engineering efforts have to go in one direction, then there’s a higher risk of burnout.

- Identify a team’s proficiency relative to its peers: Teams that create more opportunities may be more technically proficient than their peers. They solve problems faster, leaving more room to implement improvements in the delivery process.

- Assess whether you have enough overall capacity to innovate: If all your teams are struggling to create opportunities to improve, then it means you’re at the limit of what you can achieve with your current capacity. You might be able to fulfill existing obligations but will be unable to meet any additional pressures (such as rapidly launching new features to respond to competitor announcements).

- Gain assurance that teams are delivering value to the business: Teams that have a very high opportunity score—relative to other teams in your organization—might actually be delivering relatively little business value. It could mean they have too much idle time or are preoccupied with process enhancements, to the detriment of value-creating work.

This list suggests that “opportunities created” is a useful metric for tracking software development performance. But how do you actually measure available opportunities?

Opportunity-driven metrics explained

McKinsey suggests four primary “lenses” to look through:

- Time spent in the inner/outer loop

- Developer Velocity Index benchmark

- Analysis of backlog contributions

- Talent capability score

Let’s explore how these metrics provide improvement opportunities for your organization.

1. Time spent in the inner/outer loop

The software development lifecycle incorporates inner and outer loops. The inner loop is where the core engineering work gets done: writing, building, and testing code. The outer loop covers the remaining tasks needed to get that code into production, such as integration and deployment, along with administrative work that can distract and frustrate developers; examples include planning meetings, compliance audits, and collaboration with other teams.

Capturing the time spent in each loop shows where opportunities lie. Ideally, developers should spend the majority of their time in the inner loop, as this is where they contribute the most value to the organization.

Teams or individuals who get stuck in the outer loop spend more time on less productive tasks, limiting your opportunities to progress other work.
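As a rough illustration, the inner-loop share can be computed from categorized time entries. This is a minimal sketch that assumes your team already tags its time with activity labels; the category names (`coding`, `planning_meeting`, and so on) and the `TimeEntry` shape are hypothetical, not part of McKinsey’s methodology.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical activity labels; map them to however your team records its time.
INNER_LOOP = {"coding", "building", "testing"}
OUTER_LOOP = {"planning_meeting", "compliance_audit", "cross_team_sync"}


@dataclass
class TimeEntry:
    developer: str
    activity: str
    hours: float


def inner_loop_share(entries: list[TimeEntry]) -> dict[str, float]:
    """Return each developer's fraction of classified hours spent in the inner loop."""
    inner: dict[str, float] = defaultdict(float)
    total: dict[str, float] = defaultdict(float)
    for e in entries:
        if e.activity in INNER_LOOP:
            inner[e.developer] += e.hours
            total[e.developer] += e.hours
        elif e.activity in OUTER_LOOP:
            total[e.developer] += e.hours
        # Unclassified activities are skipped rather than guessed at.
    return {dev: inner[dev] / hours for dev, hours in total.items() if hours > 0}


if __name__ == "__main__":
    log = [
        TimeEntry("ana", "coding", 20),
        TimeEntry("ana", "testing", 6),
        TimeEntry("ana", "planning_meeting", 6),
        TimeEntry("raj", "coding", 8),
        TimeEntry("raj", "compliance_audit", 12),
        TimeEntry("raj", "cross_team_sync", 4),
    ]
    for dev, share in sorted(inner_loop_share(log).items()):
        print(f"{dev}: {share:.0%} inner loop")
```

Ignoring unclassified entries keeps the ratio honest about what was actually measured; tracking the trend per team over several sprints is likely more informative than any single snapshot.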

2. Developer Velocity Index benchmark

McKinsey’s existing Developer Velocity Index (DVI) benchmark encapsulates performance across several verticals including tooling, working methods, process optimization, and compliance. Teams or individuals with high DVI scores are equipped to capitalize on more opportunities; they are consistently productive at high rates of throughput, which creates windows between regularly scheduled work in which improvements can be made.

Tools are available to help you calculate DVI. You can also produce your own version by combining performance and activity metrics such as the number of commits made, issues closed, discussions contributed, and features delivered by each individual or team.

Developer satisfaction should be considered too, as frustration or inability to operate autonomously will usually impede velocity.
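If you build your own version, one simple approach is to normalize each activity metric across teams and combine them with weights, including a satisfaction score. The sketch below is a homegrown composite, not McKinsey’s DVI calculation; the metric names and weights are illustrative assumptions you would tune to your organization.

```python
# Homegrown velocity composite; NOT McKinsey's DVI methodology.
# Metric names and weights are illustrative assumptions (weights sum to 1).
WEIGHTS = {
    "commits": 0.2,
    "issues_closed": 0.2,
    "discussions": 0.1,
    "features_delivered": 0.3,
    "satisfaction": 0.2,  # e.g. mean score from a developer survey
}


def min_max(values: list[float]) -> list[float]:
    """Scale values to [0, 1]; if all values are identical, each maps to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def velocity_scores(teams: dict[str, dict[str, float]]) -> dict[str, float]:
    """Weighted sum of per-metric normalized values for each team (range 0 to 1)."""
    names = list(teams)
    scores = dict.fromkeys(names, 0.0)
    for metric, weight in WEIGHTS.items():
        normalized = min_max([teams[name][metric] for name in names])
        for name, value in zip(names, normalized):
            scores[name] += weight * value
    return scores


if __name__ == "__main__":
    teams = {
        "payments": {"commits": 420, "issues_closed": 85, "discussions": 40,
                     "features_delivered": 6, "satisfaction": 3.9},
        "search": {"commits": 310, "issues_closed": 60, "discussions": 55,
                   "features_delivered": 4, "satisfaction": 4.4},
        "platform": {"commits": 150, "issues_closed": 30, "discussions": 20,
                     "features_delivered": 2, "satisfaction": 3.1},
    }
    for name, score in sorted(velocity_scores(teams).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {score:.2f}")
```

Because min-max scaling is relative, the lowest team on every metric scores 0 by construction; the result only ranks teams within your organization and says nothing about absolute performance.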

3. Analysis of backlog contributions

The tasks that a team or individual works on can reveal opportunities to either reassign work or encourage upskilling. First, developers who routinely pick up backlog tasks represent an increased opportunity, as they deliver value beyond what is expected of them.

If those backlog tasks fall slightly outside the developer’s usual expertise, then successfully tackling them suggests an upskilling opportunity, since the engineer has already demonstrated some proficiency with those skills.

The opportunity might not necessarily relate to the specific developer who’s working on the tasks. For example, if developers are routinely undertaking infrastructure tasks—but they’re taking a relatively long time to reach completion—then the opportunity is to introduce more operations staff who are better equipped to deal with those tickets. This then frees up the software team to deliver a higher throughput on their specialist development work.
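The infrastructure example can be checked against ticket data by comparing median cycle times for the same category across teams. This is a minimal sketch assuming each ticket records a category label, the assignee’s team, and its cycle time in days; the field names, team names, and the 1.5× threshold are hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Ticket:
    category: str       # hypothetical labels, e.g. "feature", "infrastructure"
    assignee_team: str  # hypothetical team names, e.g. "app-dev", "ops"
    cycle_days: float   # time from starting work to completion


def median_cycle_time(tickets: list[Ticket]) -> dict[tuple[str, str], float]:
    """Median cycle time for each (category, team) pair."""
    groups: dict[tuple[str, str], list[float]] = {}
    for t in tickets:
        groups.setdefault((t.category, t.assignee_team), []).append(t.cycle_days)
    return {key: median(days) for key, days in groups.items()}


def slow_cross_functional_work(
    tickets: list[Ticket], home_team: str, specialist_team: str, ratio: float = 1.5
) -> list[str]:
    """Categories where home_team's median cycle time is at least `ratio` times the specialists'."""
    medians = median_cycle_time(tickets)
    flagged = []
    for (category, team), days in medians.items():
        if team != home_team:
            continue
        baseline = medians.get((category, specialist_team))
        # Only compare categories the specialist team also handles.
        if baseline is not None and baseline > 0 and days >= ratio * baseline:
            flagged.append(category)
    return sorted(flagged)


if __name__ == "__main__":
    tickets = [
        Ticket("infrastructure", "app-dev", 6),
        Ticket("infrastructure", "app-dev", 8),
        Ticket("infrastructure", "app-dev", 7),
        Ticket("infrastructure", "ops", 3),
        Ticket("infrastructure", "ops", 4),
        Ticket("feature", "app-dev", 2),
    ]
    print(slow_cross_functional_work(tickets, "app-dev", "ops"))
```

Medians are used instead of means so that a single long-running ticket does not dominate the comparison; a flagged category is a prompt for discussion, not proof that extra operations staff are needed.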

4. Talent capability score

A talent capability score summarizes the skills and abilities held within a team or organization. It also encapsulates the distribution of skill levels, from beginner through to senior experts.

High-performing organizations need a breadth of skills across DevSecOps disciplines, with a range of skill levels in each one. Junior developers ensure fresh talent enters the business, but they must be supported by senior staff. Having too many people at a similar proficiency level can hold you back in the long term, reducing your talent capability score.
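One way to quantify the spread of proficiency within each skill area is normalized Shannon entropy: 0 when everyone sits at the same level, 1 when people are evenly distributed across levels. This is an assumed measure chosen for illustration, not McKinsey’s talent capability formula, and the three proficiency bands are hypothetical.

```python
import math
from collections import Counter

LEVELS = ("junior", "mid", "senior")  # hypothetical proficiency bands


def level_spread(levels: list[str]) -> float:
    """Normalized Shannon entropy of proficiency levels (0 = uniform level, 1 = evenly spread)."""
    unknown = set(levels) - set(LEVELS)
    if unknown:
        raise ValueError(f"unknown proficiency levels: {sorted(unknown)}")
    n = len(levels)
    counts = Counter(levels)
    entropy = sum((c / n) * math.log(n / c) for c in counts.values())
    return entropy / math.log(len(LEVELS))


def capability_report(team: dict[str, list[str]]) -> dict[str, dict[str, object]]:
    """Per skill area: headcount, level spread, and whether any senior is present."""
    return {
        area: {
            "people": len(levels),
            "spread": round(level_spread(levels), 2),
            "has_senior": "senior" in levels,
        }
        for area, levels in team.items()
    }


if __name__ == "__main__":
    org = {
        "backend": ["junior", "mid", "senior"],
        "frontend": ["mid", "mid", "mid"],
        "security": ["junior", "junior"],
    }
    for area, row in capability_report(org).items():
        print(area, row)
```

A low spread, or an area with juniors but no senior, would point to the kind of imbalance described above; combining this with headcount and skill coverage would be needed for anything resembling an overall score.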

Advanced talent capability means more opportunities to deliver throughput and process improvements. Different perspectives will make it more likely that new working methods will be discovered. Similarly, having a good spread of proficiencies allows you to sustain performance long-term by coaching and mentoring newcomers into senior roles.

This progression pathway can make the organization more attractive to developers, improving staff retention and in turn creating further opportunity.

The challenges of using opportunity metrics for performance measurement

McKinsey’s report has prompted more debate about whether, how, and why software performance should be measured. Looking at the opportunity as a benchmark for performance is a novel idea that encourages a more holistic framing of results while recognizing the iterative, long-term nature of modern software lifecycles.

However, actually collecting and utilizing this data is likely to be challenging for many organizations. Moreover, the metrics don’t necessarily tell the whole story—similarly to DORA and SPACE, you need to analyze them in the context of your organization’s working methods and business aims.

A theoretical opportunity to improve isn’t necessarily meaningful, for example if it relates to a low-priority project or you’re already meeting all your performance objectives.

Opportunity isn’t the goal

Perhaps the biggest drawback of focusing on opportunity is that creating opportunities shouldn’t actually be your goal. Ultimately, software teams are always going to be guided by outputs. An opportunity represents a possibility of an outcome, but it doesn’t guarantee you will realize it. To tangibly improve, you must utilize your opportunities to drive improvements across your development lifecycle.

The metrics discussed here won’t directly tell you whether that’s happening. For example, you could hire more proficient developers to optimize your talent capability score. This creates an opportunity to achieve higher throughput—but are you actually capitalizing on that opportunity?

This question must be answered to understand whether continuing the investment is an effective way to further increase throughput. (The answer will also be the primary concern of business and finance teams who want to know whether the new hiring wave has generated financial ROI.)

It can be tempting to assume that the new highly skilled developers will immediately start producing results, but the reality could be very different. If you don’t have the tools and processes to support effective collaboration, then your engineers might actually be sitting idle.

Consequently, it’s imperative that apparent opportunities are approached with caution. You must conduct your own research to determine whether opportunities are being actioned, which could involve consideration of trends shown in outcome-oriented performance data—such as DORA’s average deployment frequency and change failure rate values.
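For example, deployment frequency and change failure rate can be computed per period from a deployment log and compared over time. This is a minimal sketch assuming each deployment record carries a date and a flag for whether it caused a production failure (for instance, one that needed a rollback or hotfix); the `Deployment` shape is hypothetical, and expressing frequency as deployments per week is a simplification of DORA’s frequency bands.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    caused_failure: bool  # e.g. required a rollback, hotfix, or incident response


def _in_window(deploys: list[Deployment], start: date, end: date) -> list[Deployment]:
    """Deployments whose date falls within the inclusive [start, end] window."""
    return [d for d in deploys if start <= d.day <= end]


def deployment_frequency(deploys: list[Deployment], start: date, end: date) -> float:
    """Average deployments per week over the inclusive window."""
    if end < start:
        raise ValueError("end must not be before start")
    weeks = ((end - start).days + 1) / 7
    return len(_in_window(deploys, start, end)) / weeks


def change_failure_rate(deploys: list[Deployment], start: date, end: date) -> float:
    """Fraction of deployments in the window that caused a failure (0.0 if none deployed)."""
    window = _in_window(deploys, start, end)
    if not window:
        return 0.0
    return sum(d.caused_failure for d in window) / len(window)


if __name__ == "__main__":
    # Ten deployments in January 2024, two of which caused failures.
    log = [Deployment(date(2024, 1, d), d in (4, 16)) for d in range(1, 29, 3)]
    jan_start, jan_end = date(2024, 1, 1), date(2024, 1, 28)
    print(deployment_frequency(log, jan_start, jan_end))
    print(change_failure_rate(log, jan_start, jan_end))
```

Running the same functions over consecutive periods, before and after acting on an opportunity such as a hiring wave, gives the kind of trend data needed to judge whether that opportunity is being converted into results.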

Even so, it may still be difficult to attribute those changes back to your opportunity results. Analyzing opportunities could therefore raise more questions than it answers if you can’t measure whether available opportunities are being converted into actual improvements.

Opportunity means obfuscation

Because opportunity must be seen only as an enabler of outcomes, not a target outcome in itself, this method adds another layer of obfuscation to your performance analysis. It is a helpful way to interpret how performance could change in the future, but it does not mean that performance will change in any particular way.

Zeroing in on opportunity while disregarding other benchmarks could therefore distract from more meaningful optimizations. Hiding simpler, more actionable signals (such as a team being understaffed, or deployments taking too long to complete) behind obscure “opportunity” scores won’t help teams that are already struggling to get a grip on performance.

Opportunity is therefore best used as an indicator that you’re on the right track. It shouldn’t be used in isolation, but for high-performing teams, it can help you understand whether you’re on course to sustain and improve your current performance. It recognizes the reality that continual reinvestment of time and resources is required to keep improving processes, and hence increase productivity.

In summary, measures such as DORA, SPACE, and your own business metrics can help you understand what’s working and what needs improvement in your software development processes. Opportunity analysis offers an additional dimension that suggests whether you’re equipped to implement those improvements across your processes and products. However, this is a derived value that can obscure important details, so it must always be tied back to your organization’s context.

Conclusion

Software development performance is a topical subject, but getting a handle on it is still hard. DORA and SPACE provide measurements for how you’re performing today, but they don’t always reveal whether you’re on a trajectory for future growth.

Assessing the opportunities being created by your teams helps build a more complete picture of long-term performance. Without the opportunity to improve, you can’t pursue the process optimizations, efficiency enhancements, and toolchain revisions that are critical to scaling DevOps teams to match product growth and market demands.

That said, the model still won’t give you a definitive picture of overall productivity. There can be multiple reasons why a metric changes or a trend appears, so it’s vital you interrogate your data to ensure that findings are real and relevant. For this reason, it’s usually best to start off small by collecting a few performance metrics—across DORA, SPACE, and opportunity analysis—that are easily measurable and have a clear impact on your business.

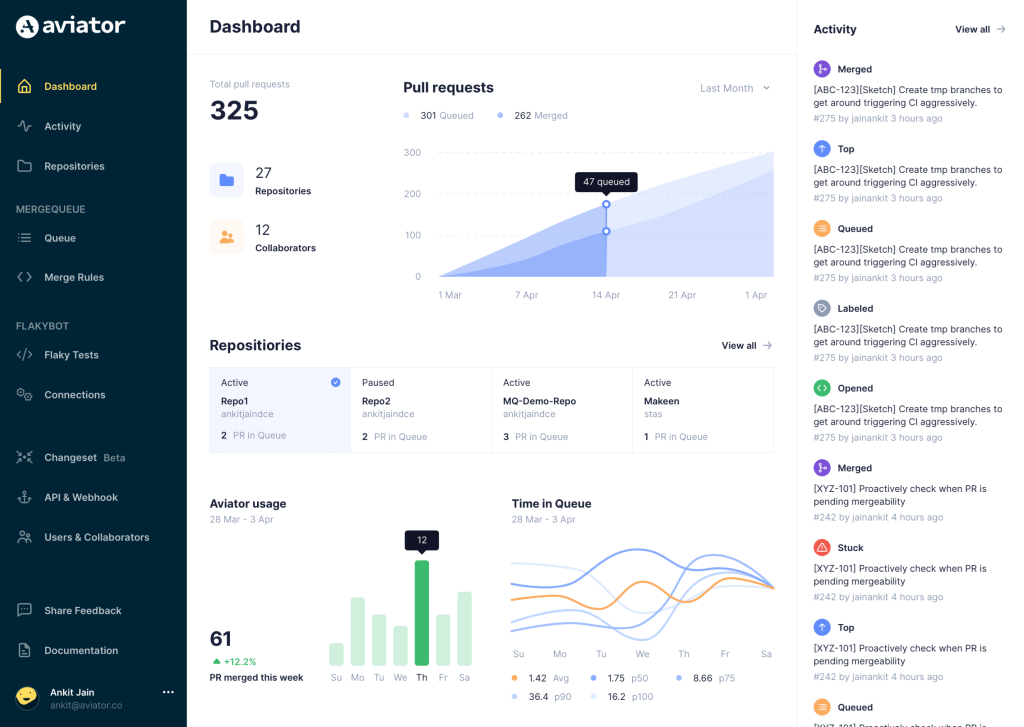

Aviator: Automate your cumbersome processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration (CI) test runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are four key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- TestDeck – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.