Migrating from GitHub Actions to Jenkins

GitHub Actions and Jenkins are popular tools for implementing continuous integration and continuous delivery (CI/CD). Integrated with GitHub repositories, GitHub Actions offers a platform to automate workflows directly within the version control environment. Jenkins is a versatile and widely used automation server.

Migrating from GitHub Actions to Jenkins can be driven by various factors. Organizations might choose this migration to capitalize on Jenkins’ advanced features, customizability, and integration capabilities.

GitHub Actions excels in simplicity and native GitHub integration, whereas Jenkins offers more flexibility, making it suitable for enterprises with complex workflows and unique requirements.

Prerequisites

To follow along with this tutorial, you should have the following:

- Understanding of CI/CD

- Jenkins installed locally

- A DockerHub account

Jenkins capabilities over GitHub Actions

Jenkins and GitHub Actions are both CI/CD tools, but they have different capabilities and strengths that make them suitable for different use cases. Here are some of the capabilities that Jenkins offers over GitHub Actions:

- Flexibility and Customization: Jenkins is known for its unparalleled customization capabilities. You have complete control over defining your CI/CD pipeline with scripted or declarative syntax, making it adaptable to various workflows and requirements.

- Extensive Plugin Ecosystem: Jenkins boasts a vast library of plugins that cover a wide range of functionalities. This allows you to integrate with a plethora of tools, services, and platforms, ensuring your pipeline can be tailored to your specific needs.

- Hybrid and On-Premises Deployments: Jenkins can be deployed on-premises, in private clouds, or in hybrid setups. This is particularly beneficial for organizations that have specific security, regulatory, or infrastructure requirements.

- Complex Workflows and Orchestration: Jenkins excels in managing complex pipelines with conditional branching, parallel stages, and advanced orchestration. This is especially useful for intricate build, test, and deployment processes.

- Custom Tools and Platforms: If your project requires specific tools, platforms, or services that are not natively supported by GitHub Actions, Jenkins allows you to create custom integrations using its extensible architecture.

- Advanced Security and Access Control: Jenkins offers fine-grained access control and security features which are essential for organizations with strict compliance requirements.

Assessment and planning

The project we will be working with is on GitHub along with the Actions pipeline. The pipeline tests, containerizes, and deploys a Python app to DockerHub. Going through the pipeline, you will notice the configurations there have the following sequence:

Trigger:

- Triggers the workflow on a push event.
- Specifies that the workflow should trigger only for pushes to the ‘master’ branch.

Jobs:

- The workflow contains a single job named build.

Job: Build:

- Runs on the ubuntu-latest operating system.
- Contains a series of steps that define the tasks to be executed.

Step: Set up Python 3.8:

- Uses the actions/setup-python@v2 action to set up Python 3.8.
- Specifies python-version: 3.8.

Step: Install dependencies:

- Installs and upgrades pip.
- Installs project dependencies listed in requirements.txt.

Step: Lint tests:

- Installs project dependencies listed in requirements.txt.
- Uses linting tools to check code quality:
  - Runs black --check . for code formatting.
  - Runs flake8 for code style and formatting checks.

Step: Run tests:

- Executes test cases using pytest.

Step: Build Docker image:

- Builds a Docker image named khabdrick/test_app:v1 using the current directory’s Dockerfile.

Step: Push Docker image to registry:

- Logs in to a Docker registry using provided credentials.
- Pushes the built Docker image khabdrick/test_app:v1 to the registry.

Each step in the build job contributes to the process of building, testing, and preparing the Docker image for deployment. The pipeline is designed to ensure code quality, test coverage, and the successful creation and distribution of a Docker image for the application.

Now that this is identified, we can easily convert it into a pipeline that will run on Jenkins.

Defining Jenkinsfile

A Jenkinsfile is a text file that defines a pipeline for Jenkins, specifying the stages, steps, and configurations required to automate the building, testing, and deployment of software applications. It allows you to define your entire continuous integration and continuous deployment (CI/CD) process as code, which can be versioned and maintained alongside your source code.

A Jenkinsfile follows the pipeline-as-code approach: developers define and manage the pipeline in code rather than through the Jenkins GUI.

Understanding Jenkinsfile structure and syntax:

A Jenkinsfile is written in Groovy, a programming language that runs on the Java Virtual Machine (JVM). It follows a structured format to define a pipeline:

- Pipeline Block: The pipeline block is the root of the Jenkinsfile and defines the entire pipeline.

- Agent Block: The agent block specifies where the pipeline should run, whether on a specific Jenkins agent, label, or in a Docker container.

- Stages Block: Inside the stages block, you define individual stages of your pipeline. Each stage corresponds to a logical phase of your CI/CD process, like building, testing, and deploying.

- Stage Block: Within each stage block, you define the steps that should be executed for that stage. A stage can have multiple steps.

- Steps Block: The steps block inside a stage lists the individual tasks to run. A step can be a shell command, a script execution, or a call to a Jenkins plugin.
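As a rough illustration of how these blocks nest, here is a minimal declarative skeleton. The stage names and echo commands are placeholders chosen for this sketch and are not part of the project's pipeline.

```groovy
// Minimal declarative Jenkinsfile skeleton (placeholder stages and commands).
pipeline {
    // Where the pipeline runs: 'any' picks any available agent.
    agent any

    stages {
        // One stage per logical phase of the CI/CD process.
        stage('Build') {
            steps {
                // Each entry inside 'steps' is a single step.
                sh 'echo "building..."'
            }
        }
        stage('Test') {
            steps {
                sh 'echo "testing..."'
            }
        }
    }
}
```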

Planning stages, steps, and agent configurations (GitHub Actions to Jenkins)

When converting a pipeline from GitHub Actions to Jenkins, you’ll need to map the stages and steps to the Jenkinsfile structure. Here’s a general approach:

- Agent Configuration:
  - Review the GitHub Actions workflow’s runs-on property to identify the required agent label or container.
  - Use the agent block in the Jenkinsfile to specify where the pipeline should run.

- Stages and Steps:
  - Each job, or logical group of steps, in the GitHub Actions workflow can be represented as a stage block in the Jenkinsfile.
  - The steps within each GitHub Actions job should be translated to steps within the corresponding Jenkins stage.

- Translating Steps:
  - Convert shell commands used in GitHub Actions steps to sh steps in Jenkins.
  - Use Jenkins plugins for actions that are not supported natively in Jenkins, such as Docker commands or custom tools.

- Credentials:
  - Replace GitHub Actions secrets with Jenkins credentials for sensitive data like passwords or tokens.
  - Use the withCredentials step to securely access these credentials in your pipeline.

- Notifications and Reporting:
  - Jenkins offers various plugins for notifications (e.g., email, Slack) and reporting (e.g., test reports). In this tutorial, we will use email.
  - Integrate the email plugins in appropriate stages to maintain similar notifications and reporting as in GitHub Actions.
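To make the agent and step mapping more concrete, the sketch below shows one possible counterpart of runs-on combined with the Set up Python 3.8 step: running the stages inside a container that already ships Python 3.8. It assumes the Docker Pipeline plugin is installed and that the agent can run Docker; the python:3.8 image is an assumption of this sketch, and this tutorial's own pipeline uses agent any instead.

```groovy
pipeline {
    // Assumption: Docker Pipeline plugin installed, Docker available on the agent.
    // Rough counterpart of `runs-on` plus `actions/setup-python` with Python 3.8.
    agent {
        docker { image 'python:3.8' }
    }

    stages {
        stage('Run Tests') {
            steps {
                // A `run:` command in the workflow becomes an `sh` step.
                sh 'python --version'
                // A virtual environment keeps installs inside the writable workspace.
                sh 'python -m venv venv && . ./venv/bin/activate && pip install -r requirements.txt && pytest'
            }
        }
    }
}
```

A container agent pins the interpreter version in the Jenkinsfile itself, at the cost of requiring Docker on every agent that may run the job.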

Migration from GitHub Actions to Jenkins

To translate the GitHub Actions workflow into a Jenkins pipeline, create a new file named Jenkinsfile in the root directory of the repository and paste in the following Groovy script. Once that is done, push the change to GitHub:

    pipeline {
        agent any

        triggers {
            pollSCM('*/5 * * * *') // Polls the SCM every 5 minutes
        }

        stages {
            stage('Install Dependencies') {
                steps {
                    // sh 'apt-get update && apt-get install -y python3 python3-venv'

                    // Create a Python virtual environment
                    sh 'python3 -m venv venv'

                    // Each sh step starts a new shell, so activate the virtual
                    // environment in the same step that upgrades pip, installs
                    // the dependencies, and checks formatting
                    sh '''
                        . ./venv/bin/activate &&
                        pip install --upgrade pip &&
                        pip install -r requirements.txt &&
                        black --check .
                    '''
                }
            }

            stage('Lint tests') {
                steps {
                    sh '''
                        . ./venv/bin/activate &&
                        flake8 . --count --ignore=W503,E501 --max-line-length=91 --show-source --statistics --exclude=venv &&
                        black --check --exclude venv .
                    '''
                }
            }

            stage('Run Tests') {
                steps {
                    sh '. ./venv/bin/activate && pytest'
                }
            }

            stage('Build Docker Image') {
                steps {
                    sh 'docker build -t khabdrick/test_app:v1 .'
                }
            }

            stage('Push Docker Image to Registry') {
                steps {
                    withCredentials([string(credentialsId: 'docker-password', variable: 'DOCKER_PASSWORD')]) {
                        // Pass the password on stdin so it does not appear in the process list
                        sh 'echo "$DOCKER_PASSWORD" | docker login -u khabdrick --password-stdin'
                        sh 'docker push khabdrick/test_app:v1'
                    }
                }
            }
        }

        post {
            success {
                emailext subject: 'CI/CD Pipeline Notification',
                         body: 'Your build was successful! ✨ 🍰 ✨',
                         to: 'youremail@gmail.com',
                         attachLog: true
            }
            failure {
                emailext subject: 'CI/CD Pipeline Notification',
                         body: 'Your build failed. Please investigate. ❌ ❌ ❌',
                         to: 'youremail@gmail.com',
                         attachLog: true
            }
        }
    }

The pipeline is defined to run on any available agent. It is set to poll the source code management (SCM) for changes every 5 minutes using the **pollSCM** trigger.

Stages:

- Install Dependencies:
  - This stage is responsible for setting up the necessary dependencies for the project.
  - It creates a Python virtual environment named ‘venv’.
  - It activates the virtual environment, installs Python dependencies from ‘requirements.txt’, and checks code formatting using Black.
  - Pip (the Python package manager) is upgraded within the virtual environment.

- Lint tests:
  - In this stage, code linting is performed using Flake8.
  - It checks for code style violations, ignoring specific warnings (W503 and E501).
  - Black is used to check code formatting.

- Run Tests:
  - This stage activates the virtual environment and runs tests using pytest.

- Build Docker Image:
  - This stage builds a Docker image tagged as ‘khabdrick/test_app:v1’ from the project’s source code.

- Push Docker Image to Registry:
  - Docker login is performed with credentials retrieved from Jenkins credentials using the withCredentials block.
  - The Docker image is pushed to a Docker registry with the specified tag.

Post-build Actions:

- In case of a successful build, an email notification is sent including a success message.
- If the build fails, an email notification is sent including a failure message, prompting further investigation.
- The email notifications are sent to ‘youremail@gmail.com’, and the build logs are attached to the email.
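The original workflow ran only for pushes to the ‘master’ branch, whereas the Jenkinsfile runs the same stages on every branch Jenkins builds. One possible way to narrow the publishing step, sketched here under the assumption that the job is a Multibranch Pipeline, is a when condition on the push stage. The stage layout is trimmed for illustration and is not the tutorial's full pipeline.

```groovy
pipeline {
    agent any

    stages {
        stage('Build Docker Image') {
            steps {
                sh 'docker build -t khabdrick/test_app:v1 .'
            }
        }

        stage('Push Docker Image to Registry') {
            // Skip this stage unless the build is for the master branch.
            when {
                branch 'master'
            }
            steps {
                withCredentials([string(credentialsId: 'docker-password', variable: 'DOCKER_PASSWORD')]) {
                    // Password is read from stdin rather than passed as an argument.
                    sh 'echo "$DOCKER_PASSWORD" | docker login -u khabdrick --password-stdin'
                    sh 'docker push khabdrick/test_app:v1'
                }
            }
        }
    }
}
```

With this condition, feature branches would still be linted, tested, and built, but only master builds would publish an image.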

Now log in to the Jenkins GUI and click the “New Item” button (http://localhost:8080/view/all/newJob on a local installation) to create a new project. Give the item a name, select the “Multibranch Pipeline” option, and save.

You will be prompted to add more configurations to the item.

To work with your project on GitHub, Jenkins needs your GitHub credentials. Click the “Branch Sources” tab, click the “Add source” dropdown, and select GitHub. You will now see a button to add your GitHub username and password. GitHub no longer accepts account passwords for Git operations over HTTPS, so enter a personal access token in the password field.

Now paste the repository HTTPS URL, then save.

Now you need to give Jenkins permission to use Docker. You can do this by running the following command:

    sudo usermod -a -G docker jenkins

This modification allows the “jenkins” user to run Docker commands and interact with the Docker daemon without needing to use “sudo” (superuser privileges), making it possible to integrate Jenkins with Docker. Restart Jenkins afterwards so that the new group membership takes effect.
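If you want to confirm that the change took effect, a throwaway pipeline along the following lines could help. This is only a sanity-check sketch; the stage name is made up for illustration.

```groovy
pipeline {
    agent any

    stages {
        stage('Check Docker Access') {
            steps {
                // Show the user and groups the build runs with; 'docker' should be listed.
                sh 'id'
                // Fails with a permission error if this user cannot reach the Docker daemon.
                sh 'docker info'
            }
        }
    }
}
```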

Migrating environment variables and secrets

In our GitHub Actions pipeline, the DockerHub password is saved as a secret. We can do the same in Jenkins.

To add a credential for Docker in Jenkins, you can follow these steps:

- Navigate to “Manage Jenkins”:
  - Click on “Manage Jenkins” on the Jenkins dashboard.

- Navigate to “Credentials”:
  - On the “Manage Jenkins” page, select “Credentials” from the Security section.

- Add a New Credential:
  - Click on “(global)” in the “Stores scoped to Jenkins” section.
  - Click on the “Add Credentials” button in the top right-hand corner.

- Enter Credential Information:
  - In the “Kind” dropdown, select “Secret text”.
  - For “Secret”, enter the Docker registry password.

- Add a Unique Credential ID:
  - In the “ID” field, enter a unique identifier for this credential. In our example, we used 'docker-password' as the credentialsId, so you should use the same ID here. After that, click the “Create” button.
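Besides the withCredentials step, declarative pipelines can also bind a stored secret through the environment block. The sketch below assumes the 'docker-password' secret text created in the steps above; the single login stage is only there for illustration.

```groovy
pipeline {
    agent any

    environment {
        // Binds the secret text stored under the ID 'docker-password'.
        DOCKER_PASSWORD = credentials('docker-password')
    }

    stages {
        stage('Docker Login') {
            steps {
                // Single quotes let the shell, not Groovy, expand the variable.
                sh 'echo "$DOCKER_PASSWORD" | docker login -u khabdrick --password-stdin'
            }
        }
    }
}
```

Declared at the pipeline level, the variable is visible to every stage, so withCredentials remains the narrower choice when only one stage needs the secret.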

Sending email notifications

To send email notifications in a Jenkins pipeline, you can use the **emailext** plugin, which extends the built-in email notification capabilities of Jenkins. Here’s how to configure and use it within a Jenkins pipeline:

- Install the Email Extension Plugin: If you haven’t already installed the Email Extension Plugin, you can do so by navigating to “Manage Jenkins” > “Plugins” > “Available” tab, and then searching for “Email Extension Plugin” and installing it.

- Configure Email Settings: Before using the plugin, configure your email settings in Jenkins:
  - Go to “Manage Jenkins” > “System”.
  - Scroll down to the “Extended E-mail Notification” section.
  - Configure the SMTP server details (e.g., SMTP server, SMTP port, and credentials) for your email service provider. You can use SendGrid, Mailchimp, Amazon SES, etc. In the image below, Amazon SES is used.
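Once the SMTP settings are in place, a notification can also carry details about the build. The following is a sketch rather than the tutorial's exact configuration: it sends one email for every outcome and fills in the job name, build number, result, and URL from Jenkins' built-in variables. The single placeholder stage and the recipient address are assumptions.

```groovy
pipeline {
    agent any

    stages {
        stage('Example') {
            steps {
                sh 'echo "pipeline work goes here"'
            }
        }
    }

    post {
        // Runs regardless of whether the build succeeded or failed.
        always {
            emailext subject: "${env.JOB_NAME} #${env.BUILD_NUMBER}: ${currentBuild.currentResult}",
                     body: "Build finished with status ${currentBuild.currentResult}. Details: ${env.BUILD_URL}",
                     to: 'youremail@gmail.com',
                     attachLog: true
        }
    }
}
```

Note that BUILD_URL is only populated when the Jenkins URL is set in the system configuration.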

Testing and verification

You can test the pipeline by making a change to your code and pushing it to GitHub. Within five minutes you should receive an email similar to what you see in the image below. This verifies that the pipeline is working as expected.

You can also alter a test so that it fails; you should then receive the failure email instead.

Conclusion

In this tutorial, we learned how to develop a Jenkins pipeline from an existing GitHub Actions pipeline. We implemented secret management, email notification, and pipeline development in Jenkins. We also walked through a strategy for migrating from GitHub Actions to Jenkins.

Of course, you can get more technical with the pipeline. To test what you have learned in this tutorial, take it a step further by researching how to speed up pipeline runs, for example by parallelizing stages or caching dependencies. You could also implement more robust error handling and recovery strategies.
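As a starting point for that research, the sketch below runs linting and tests side by side and adds basic recovery through a retry and a global timeout. It is an illustration under assumptions, not a tuned setup: the stage grouping, the 30-minute limit, and the retry count are arbitrary choices.

```groovy
pipeline {
    agent any

    options {
        // Abort the whole run if it takes longer than 30 minutes.
        timeout(time: 30, unit: 'MINUTES')
    }

    stages {
        stage('Install Dependencies') {
            steps {
                sh 'python3 -m venv venv'
                // Retry once more if the install fails, e.g. on a network hiccup.
                retry(2) {
                    sh '. ./venv/bin/activate && pip install -r requirements.txt'
                }
            }
        }

        stage('Checks') {
            // Lint and tests only read from the shared virtual environment,
            // so they can run at the same time.
            parallel {
                stage('Lint') {
                    steps {
                        sh '. ./venv/bin/activate && flake8 . --exclude=venv'
                    }
                }
                stage('Run Tests') {
                    steps {
                        sh '. ./venv/bin/activate && pytest'
                    }
                }
            }
        }
    }
}
```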

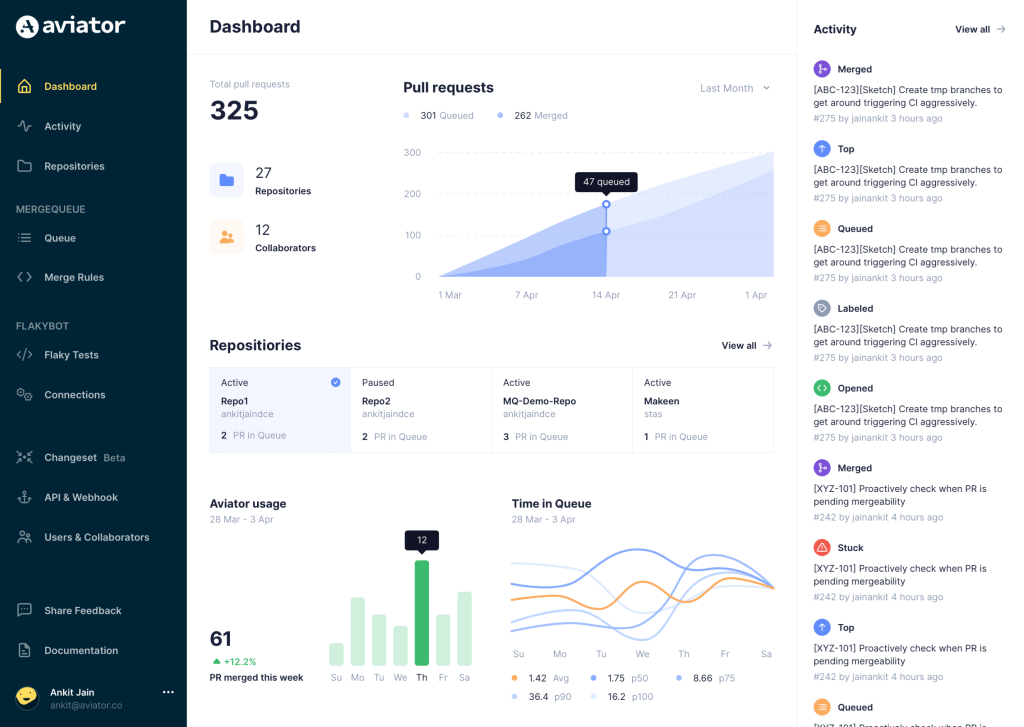

Aviator: Automate your cumbersome processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- TestDeck – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.