Scanning AWS S3 Buckets for Security Vulnerabilities

Learn about the common security risks associated with cloud storage buckets, and see how you can use S3Scanner, an open source tool, to scan your buckets for them.

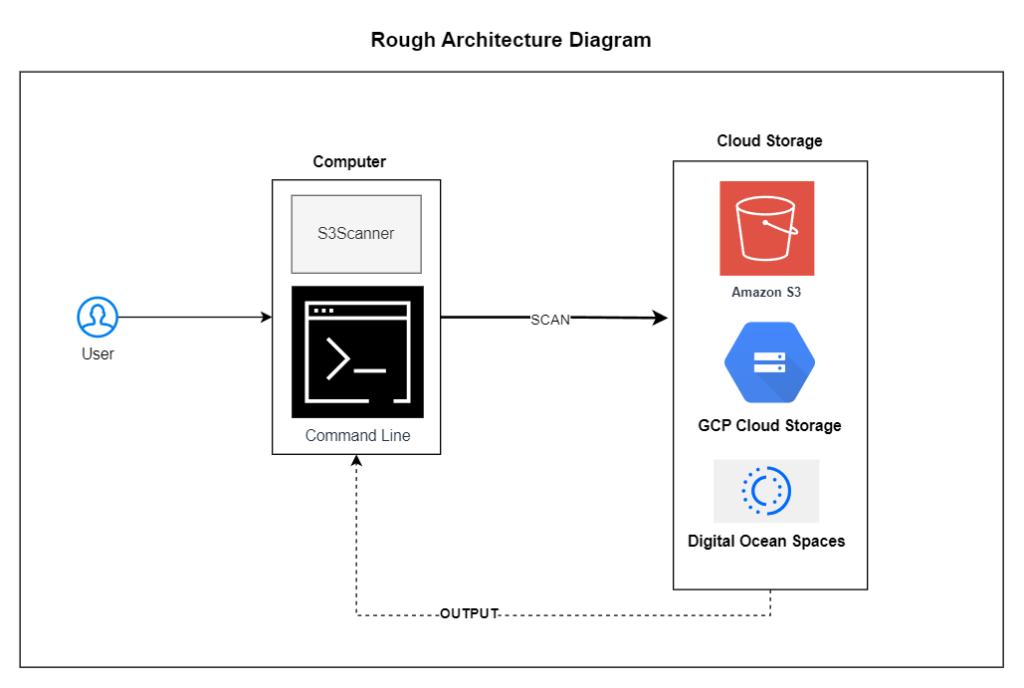

Most major cloud providers offer some variation of a file bucket service. These services let users store and retrieve data in the cloud, offering scalability, durability, and accessibility through web portals and APIs. For instance, AWS offers Amazon Simple Storage Service (S3), GCP offers Google Cloud Storage, and DigitalOcean provides Spaces. However, unsecured buckets pose a major security risk and can lead to data breaches, data leakage, malware distribution, and data tampering. For example, a United Kingdom council’s data on members’ benefits was exposed by an unsecured AWS bucket. In another incident in 2021, an unsecured bucket belonging to a nonprofit cancer organization exposed sensitive images and data for tens of thousands of individuals.

Thankfully, S3Scanner can help. S3Scanner is a free, easy-to-use tool that helps you find unsecured file buckets across several providers, including Amazon S3, Google Cloud Storage, and DigitalOcean Spaces, so that you can fix them.

In this article, you’ll learn all about S3Scanner and how it can help identify unsecured file buckets on multiple cloud providers.

Common Security Risks in Amazon S3 Buckets

Amazon S3 buckets offer a simple and scalable solution for storing your data in the cloud. However, just like any other online storage platform, there are security risks you need to be aware of.

Following are some of the most common security risks associated with Amazon S3 buckets:

- Unintentional public access: Misconfigurations, such as overly permissive settings that grant public read access, can let unauthorized users access your S3 bucket and perform actions on it.

- Insecure bucket policies and permissions: Access to S3 data is controlled with AWS Identity and Access Management (IAM) policies attached to users, groups, and roles, and with bucket policies attached to the bucket itself. If your bucket policies are not properly configured (eg a policy that uses a wildcard, *, as the principal), unauthorized users can gain access to your data. Poorly configured permissions can also lead to compliance violations through unauthorized data access or modification, which can breach regulatory requirements and expose the organization to legal consequences.

- Data exposure and leakage: Even if your S3 bucket isn’t public, data can still be exposed. For instance, data can leak if you accidentally share an object’s URL (for example, a presigned URL) with someone else or if the bucket’s permissions are broader than necessary. Data exposure can also occur if you download data from your S3 bucket to an insecure location.

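- Lack of encryption: Storing data in S3 without encryption is another significant security risk. Without encryption, data intercepted in transit or read from compromised storage devices can expose sensitive information. Note that since January 2023, Amazon S3 encrypts all new objects at rest by default with Amazon S3 managed keys (SSE-S3); encryption in transit still depends on clients using HTTPS.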
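To make the wildcard risk concrete, here is a minimal Python sketch, not part of S3Scanner, that scans a bucket policy for Allow statements granting access to every principal. It assumes the standard JSON policy layout (Version, Statement, Effect, Principal) and deliberately ignores Condition blocks, so it may flag statements that a condition actually restricts. The bucket name, account ID, and role name in the example are placeholders.

```python
import json


def public_allow_statements(policy_json: str) -> list:
    """Return Allow statements whose Principal is a wildcard (anyone)."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    # A policy may contain a single statement object instead of a list.
    if isinstance(statements, dict):
        statements = [statements]

    risky = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        # Both "*" and {"AWS": "*"} mean every principal, including anonymous users.
        if principal == "*":
            risky.append(stmt)
        elif isinstance(principal, dict):
            aws = principal.get("AWS")
            values = aws if isinstance(aws, list) else [aws]
            if "*" in values:
                risky.append(stmt)
    return risky


# Placeholder policy: one public statement, one scoped to a single IAM role.
example = """{
  "Version": "2012-10-17",
  "Statement": [
    {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
     "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    {"Sid": "AppRole", "Effect": "Allow",
     "Principal": {"AWS": "arn:aws:iam::111122223333:role/app"},
     "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"}
  ]
}"""

for stmt in public_allow_statements(example):
    print(stmt["Sid"])  # prints "PublicRead"
```

In practice, you would feed it a policy retrieved from your own account and review each flagged statement by hand, since conditions and account-level settings can change the effective access.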

Managing AWS access control and encryption options can be difficult. AWS offers numerous tools, ranging from intricate access controls to robust encryption options, that help protect your data and accounts from unauthorized access. Navigating this wide range of tools can be daunting, especially if you don’t have a background in security, and a single misconfigured policy or permission can leave sensitive data exposed to unintended audiences.

This is where S3Scanner could be useful.

What Is S3Scanner

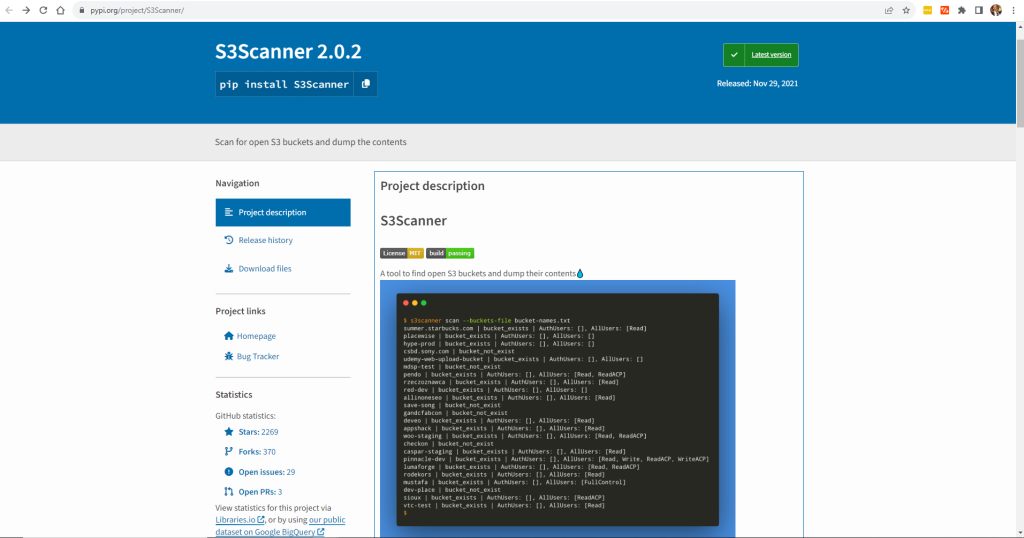

S3Scanner is an open source tool designed for scanning and identifying security vulnerabilities in Amazon S3 buckets:

S3Scanner supports many popular platforms including:

- AWS (the subject platform of this article)

- GCP

- DigitalOcean

- Linode

- Scaleway

You can also use S3Scanner with custom providers, such as your own S3-compatible storage service, which makes it versatile enough for a wide range of organizations.

Note that for providers other than AWS, S3Scanner currently supports scanning only for anonymous user permissions.
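Scanning many buckets at once starts with a file of candidate names. The following Python sketch is a hypothetical helper, not part of S3Scanner: it writes names.txt with one name per line, skipping duplicates and names that break a simplified subset of the Amazon S3 naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens only; must start and end with a letter or digit; no adjacent dots; not formatted like an IP address). Other providers have their own naming rules, so treat the filter as AWS-specific.

```python
import re
from pathlib import Path

# Simplified subset of the Amazon S3 bucket naming rules (not exhaustive):
# 3-63 chars, lowercase letters/digits/dots/hyphens, letter or digit at both ends.
NAME_PATTERN = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
IP_PATTERN = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if name passes the simplified S3 naming checks."""
    return (
        NAME_PATTERN.fullmatch(name) is not None
        and ".." not in name
        and IP_PATTERN.fullmatch(name) is None
    )


def write_bucket_file(candidates, path="names.txt"):
    """Write valid, de-duplicated names to path (one per line) and return them."""
    stripped = (c.strip() for c in candidates)
    # dict.fromkeys removes duplicates while keeping the original order.
    kept = [n for n in dict.fromkeys(stripped) if is_plausible_bucket_name(n)]
    Path(path).write_text("".join(f"{n}\n" for n in kept), encoding="utf-8")
    return kept


# Hypothetical candidate names for illustration.
kept = write_bucket_file([
    "acme-backups",
    "Acme-Logs",           # uppercase letters are not allowed
    "ab",                  # too short
    "acme..assets",        # adjacent dots
    "192.168.1.10",        # looks like an IP address
    "acme.static-assets",
    "acme-backups",        # duplicate
])
print(kept)  # ['acme-backups', 'acme.static-assets']
```

The resulting file is what the -bucket-file flag expects to read.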

The following command shows basic S3Scanner usage: it scans the buckets listed in a file called names.txt (one bucket name per line) and enumerates their objects:

s3scanner -bucket-file names.txt -enumerate

The following are some of S3Scanner’s key features:

Multithreaded Scanning

S3Scanner uses multiple threads to check several buckets concurrently, which speeds up scans of long bucket lists. To set the number of threads, use the -threads flag followed by the number of threads you want.

For instance, if you want to use ten threads, you’ll use the following command:

s3scanner -bucket my_bucket -threads 10

Config File

If you’re using flags that require config options like custom providers, you’ll need to create a config file. To do so, create a file named config.yml and put it in one of the following locations where S3Scanner will look for it:

- The current directory

- /etc/s3scanner/

- $HOME/.s3scanner/

Built-In and Custom Storage Provider Support

As mentioned earlier, S3Scanner works with various providers. Use the -provider option to specify the object storage provider when checking buckets.

For instance, if you use GCP, you’d use the following command:

s3scanner -bucket my_bucket -provider gcp

To use a provider that isn’t supported out of the box, such as a storage service on your local network, set the provider value to custom:

s3scanner -bucket my_bucket -provider custom

When you’re working with a custom provider, you also need to set config file keys under providers.custom: address_style, endpoint_format, insecure, and regions. Here’s an example of a custom provider config:

# providers.custom required by `-provider custom`
#   address_style - Addressing style used by endpoints.
#     type: string
#     values: "path" or "vhost"
#   endpoint_format - Format of endpoint URLs. Should contain '$REGION' as placeholder for region name
#     type: string
#   insecure - Ignore SSL errors
#     type: boolean
# regions must contain at least one option
providers:
  custom:
    address_style: "path"
    endpoint_format: "https://$REGION.vultrobjects.com"
    insecure: false
    regions:
      - "ewr1"

Comprehensive Permission Analysis

S3Scanner checks each bucket’s permissions and reports which access rights, such as READ or READ_ACP, are granted to all users and to authenticated users. This helps you spot misconfigured access controls on each bucket.

PostgreSQL Database Integration

S3Scanner can save scan results directly to a PostgreSQL database. This helps maintain a structured and easily accessible repository of vulnerabilities. Storing results in a database also enhances your ability to track historical data and trends.

To save all scan results to a PostgreSQL database, use the -db flag, like this:

s3scanner -bucket my_bucket -db

This option requires the db.uri key in the config file. Your config file should look like this:

# Required by -db
db:
  uri: "postgresql://user:password@db.host.name:5432/schema_name"

RabbitMQ Connection for Automation

You can also integrate S3Scanner with RabbitMQ, an open source message broker, for automation. Other systems can publish bucket names to a queue for S3Scanner to scan, which lets you build automated or scheduled scanning workflows. Combined with the results you store, those workflows can drive alerts, notifications, or further actions based on the identified vulnerabilities, supporting proactive and continuous security.

The -mq flag is used to connect to a RabbitMQ server, and it consumes messages that contain the bucket names to scan:

s3scanner -mq

The -mq flag requires mq.queue_name and mq.uri keys to be set up in the config file.
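Because -db, -mq, and -provider custom each depend on config keys, a quick pre-flight check can catch omissions before a scan. This minimal Python sketch is not part of S3Scanner: it assumes you have already parsed config.yml into a nested dict (for example, with PyYAML’s yaml.safe_load), and its required-key table simply restates the keys described in this article, so check your S3Scanner version’s documentation for the authoritative list. It also shows how endpoint_format expands once per region.

```python
# Config keys this article lists as required by each option (assumption:
# check your S3Scanner version's documentation for the authoritative list).
REQUIRED_KEYS = {
    "-db": ["db.uri"],
    "-mq": ["mq.queue_name", "mq.uri"],
    "-provider custom": [
        "providers.custom.address_style",
        "providers.custom.endpoint_format",
        "providers.custom.insecure",
        "providers.custom.regions",
    ],
}


def lookup(config: dict, dotted_key: str):
    """Follow a dotted key such as 'mq.uri' through nested dicts; None if absent."""
    node = config
    for part in dotted_key.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def missing_keys(config: dict, options: list) -> list:
    """Return the required keys that are absent for the options you plan to use."""
    return [
        key
        for option in options
        for key in REQUIRED_KEYS[option]
        if lookup(config, key) is None
    ]


def custom_endpoints(config: dict) -> list:
    """Expand endpoint_format once per region by substituting $REGION."""
    custom = lookup(config, "providers.custom") or {}
    template = custom.get("endpoint_format", "")
    return [template.replace("$REGION", region) for region in custom.get("regions", [])]


# The configs from this article, already parsed into a dict (no mq section yet).
config = {
    "providers": {
        "custom": {
            "address_style": "path",
            "endpoint_format": "https://$REGION.vultrobjects.com",
            "insecure": False,
            "regions": ["ewr1"],
        }
    },
    "db": {"uri": "postgresql://user:password@db.host.name:5432/schema_name"},
}

print(missing_keys(config, ["-db", "-mq", "-provider custom"]))  # ['mq.queue_name', 'mq.uri']
print(custom_endpoints(config))  # ['https://ewr1.vultrobjects.com']
```

Note that lookup treats a key set to false (such as insecure) as present; only absent or null keys are reported.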

Customizable Reporting

S3Scanner can print results as human-readable log lines or as JSON, so you can turn its findings into reports tailored to your requirements. This flexibility helps you communicate findings effectively and present information in a format that aligns with your organization’s reporting standards.

For instance, you can use the -json flag to output the scan results in JSON format:

s3scanner -bucket my-bucket -json

Once the output is in JSON, you can pipe it to jq, a command-line JSON processor, or other tools that accept JSON, and format the fields as needed.
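If jq isn’t available, a few lines of Python can do the same kind of reshaping. This sketch assumes the -json output contains one JSON object per line and prints selected fields as tab-separated values. The field paths it uses (bucket.name, bucket.exists) are placeholders, not a documented S3Scanner schema, so inspect your own -json output and adjust them.

```python
import json
import sys


def select_fields(record, paths):
    """Pull dotted paths such as 'bucket.name' out of a nested JSON record."""
    values = []
    for path in paths:
        node = record
        for part in path.split("."):
            node = node.get(part) if isinstance(node, dict) else None
        values.append("" if node is None else str(node))
    return values


def to_rows(lines, paths):
    """Yield one tab-separated row per JSON line, skipping blank or non-JSON lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # e.g. plain-text log lines mixed into the stream
        yield "\t".join(select_fields(record, paths))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical field paths: adjust them to match your actual -json output.
    fields = ["bucket.name", "bucket.exists"]
    # Pass a file path, or "-" to read from a pipe.
    if sys.argv[1] == "-":
        for row in to_rows(sys.stdin, fields):
            print(row)
    else:
        with open(sys.argv[1], encoding="utf-8") as handle:
            for row in to_rows(handle, fields):
                print(row)
```

Saved under a name of your choosing, such as select_fields.py, it could sit at the end of a pipeline: s3scanner -bucket my-bucket -json | python select_fields.py -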

How S3Scanner Works

To use S3Scanner, you need to install it on your system. The tool is available on GitHub, and the installation instructions vary by platform. Supported options include Homebrew on macOS, several Linux distributions (Kali Linux, Parrot OS, and BlackArch), Docker, winget on Windows, and Go, or you can build it from source.

The installation steps for the various platforms and version numbers are shown below:

| Platform | Version | Install command |
|---|---|---|
| Homebrew (macOS) | v3.0.4 | brew install s3scanner |
| Kali Linux | 3.0.0 | apt install s3scanner |
| Parrot OS | – | apt install s3scanner |
| BlackArch | 464.fd24ab1 | pacman -S s3scanner |
| Docker | v3.0.4 | docker run ghcr.io/sa7mon/s3scanner |
| Winget (Windows) | v3.0.4 | winget install s3scanner |
| Go | v3.0.4 | go install -v github.com/sa7mon/s3scanner@latest |
| Other (build from source) | v3.0.4 | git clone git@github.com:sa7mon/S3Scanner.git && cd S3Scanner && go build -o s3scanner . |

For instance, on Windows, you would use winget and run winget install s3scanner. Your output should look similar to this:

Found S3Scanner [sa7mon.S3Scanner] Version 3.0.4
This application is licensed to you by its owner.
Microsoft is not responsible for, nor does it grant any licenses to, third-party packages.
Downloading https://github.com/sa7mon/S3Scanner/releases/download/v3.0.4/S3Scanner_Windows_x86_64.zip
  ██████████████████████████████  6.52 MB / 6.52 MB
Successfully verified installer hash
Extracting archive...
Successfully extracted archive
Starting package install...
Command line alias added: "S3Scanner"
Successfully installed

The last line shows that S3Scanner was successfully installed.

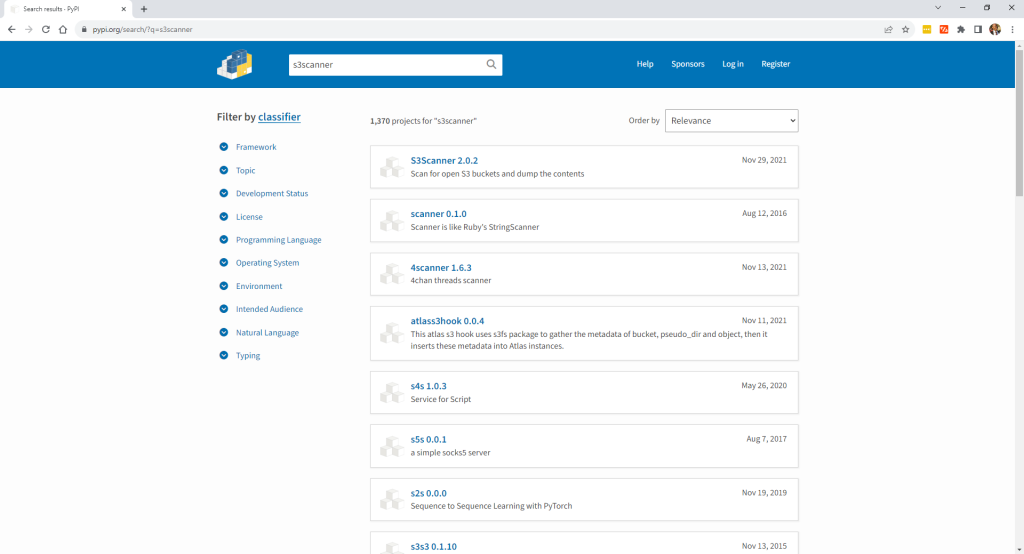

If you want to avoid installing S3Scanner via the above methods, you can also use the Python Package Index (PyPI). To do so, search for S3Scanner on PyPI:

And select the first option that appears (ie S3Scanner):

Create and navigate to a directory of your choosing (eg s3scanner_directory) and run the command pip install S3Scanner to install it.

Please note that you need to have Python and pip installed on your computer to be able to run the

pipcommand.

Your output should look similar to this:

Collecting S3Scanner
  Downloading S3Scanner-2.0.2-py3-none-any.whl (15 kB)
Requirement already satisfied: boto3>=1.20 in c:\python\python39\lib\site-packages (from S3Scanner) (1.34.2)
Requirement already satisfied: botocore<1.35.0,>=1.34.2 in c:\python\python39\lib\site-packages (from boto3>=1.20->S3Scanner) (1.34.2)
Requirement already satisfied: jmespath<2.0.0,>=0.7.1 in c:\python\python39\lib\site-packages (from boto3>=1.20->S3Scanner) (1.0.1)
Requirement already satisfied: s3transfer<0.10.0,>=0.9.0 in c:\python\python39\lib\site-packages (from boto3>=1.20->S3Scanner) (0.9.0)
Requirement already satisfied: python-dateutil<3.0.0,>=2.1 in c:\python\python39\lib\site-packages (from botocore<1.35.0,>=1.34.2->boto3>=1.20->S3Scanner) (2.8.2)
Requirement already satisfied: urllib3<1.27,>=1.25.4 in c:\python\python39\lib\site-packages (from botocore<1.35.0,>=1.34.2->boto3>=1.20->S3Scanner) (1.26.18)
Requirement already satisfied: six>=1.5 in c:\python\python39\lib\site-packages (from python-dateutil<3.0.0,>=2.1->botocore<1.35.0,>=1.34.2->boto3>=1.20->S3Scanner) (1.16.0)
Installing collected packages: S3Scanner
Successfully installed S3Scanner-2.0.2

This confirms that S3Scanner was successfully installed.

Configure Scanning Parameters

Before running any scans, you need to make sure everything is working and configure your scanning parameters.

Run one of the following command to make sure S3Scanner is configured correctly:

s3scanner -hs3scanner --helpYou should receive some information about the various options you can use when scanning buckets:

usage: s3scanner [-h] [--version] [--threads n] [--endpoint-url ENDPOINT_URL]

[--endpoint-address-style {path,vhost}] [--insecure]

{scan,dump} ...

s3scanner: Audit unsecured S3 buckets

by Dan Salmon - github.com/sa7mon, @bltjetpack

optional arguments:

-h, --help show this help message and exit

--version Display the current version of this tool

--threads n, -t n Number of threads to use. Default: 4

--endpoint-url ENDPOINT_URL, -u ENDPOINT_URL

URL of S3-compliant API. Default: https://s3.amazonaws.com

--endpoint-address-style {path,vhost}, -s {path,vhost}

Address style to use for the endpoint. Default: path

--insecure, -i Do not verify SSL

mode:

{scan,dump} (Must choose one)

scan Scan bucket permissions

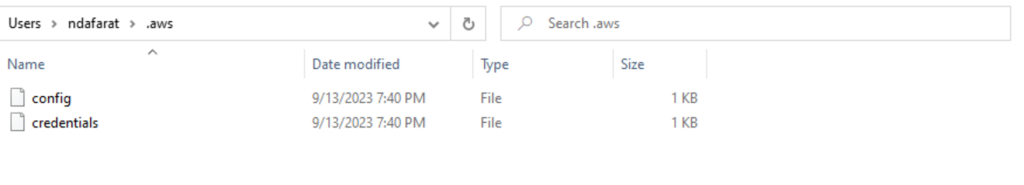

dump Dump the contents of bucketsIf you have the AWS Command Line Interface (AWS CLI) installed and have AWS credentials specified in the .aws folder, S3Scanner will pick up these credentials for use when scanning. Otherwise, you have to install the AWS CLI to be able to pick buckets in your environment:

Run Scans and Interpret Results

To run a scan, you need to run s3scanner and provide the flags, such as scan or dump, and the name of the bucket. For example, to scan for permissions on a bucket called my-bucket, you would run s3scanner scan --bucket my-bucket.

This gives you a similar output to the following (the columns are delimited by the pipe character, |):

my-bucket | bucket_exists | AuthUsers: [], AllUsers: []The first portion of the output gives you the name of the bucket, and it tells you if that bucket exists in the S3 universe. The last portion of the output shows you the permissions attributable to authenticated users (anyone with an AWS account) as well as all users.

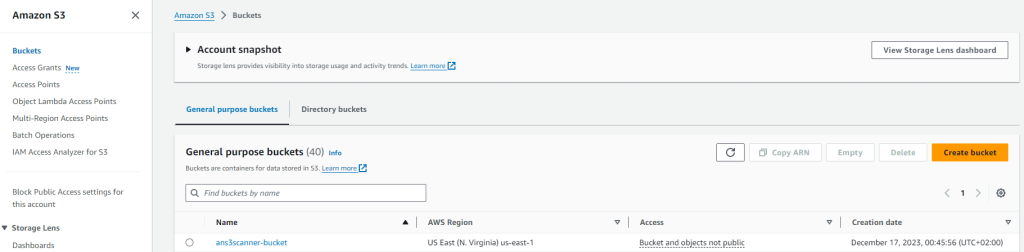

Run a scan command for a bucket that is in your AWS environment, such as ans3scanner-bucket, like this:

You should get the following output:

ans3scanner-bucket | bucket_exists | AuthUsers: [Read], AllUsers: []This output shows that the bucket has authenticated users granted [Read] rights.
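When you scan many buckets, you’ll want to flag the interesting lines automatically. This Python sketch pulls the AuthUsers and AllUsers permission lists out of a text result line with a regular expression. It is written against the sample output lines in this article, so treat the pattern as an assumption and adjust it if your S3Scanner version prints something different.

```python
import re

# Matches "AuthUsers: [...]" or "AllUsers: [...]" anywhere in a result line.
GROUP_PATTERN = re.compile(r"(AuthUsers|AllUsers): \[([^\]]*)\]")


def parse_permissions(line: str) -> dict:
    """Map each grantee group to its upper-cased permission names."""
    perms = {}
    for group, body in GROUP_PATTERN.findall(line):
        perms[group] = [p.strip().upper() for p in body.split(",") if p.strip()]
    return perms


def is_exposed(line: str) -> bool:
    """True if any permission is granted to authenticated users or all users."""
    return any(parse_permissions(line).values())


samples = [
    "my-bucket | bucket_exists | AuthUsers: [], AllUsers: []",
    "ans3scanner-bucket | bucket_exists | AuthUsers: [Read], AllUsers: []",
    'level=info msg="exists | s3scanner-demo | default | AuthUsers: [] | AllUsers: [READ, READ_ACP]"',
]
for line in samples:
    print(is_exposed(line), parse_permissions(line))
```

Upper-casing the permission names lets the same check treat [Read] and [READ] alike, so you can filter scan output by grantee group regardless of which format produced it.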

Scan Your GCP Buckets

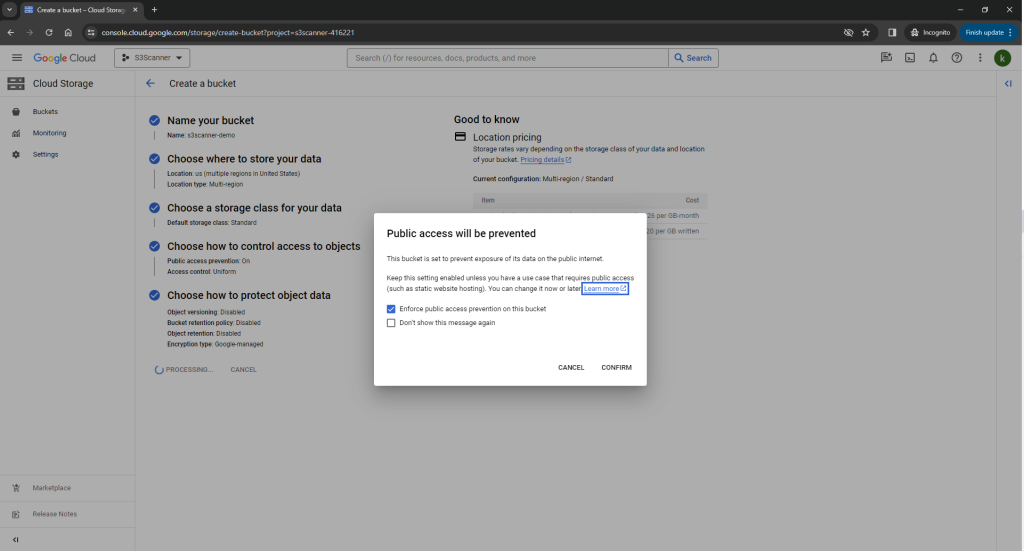

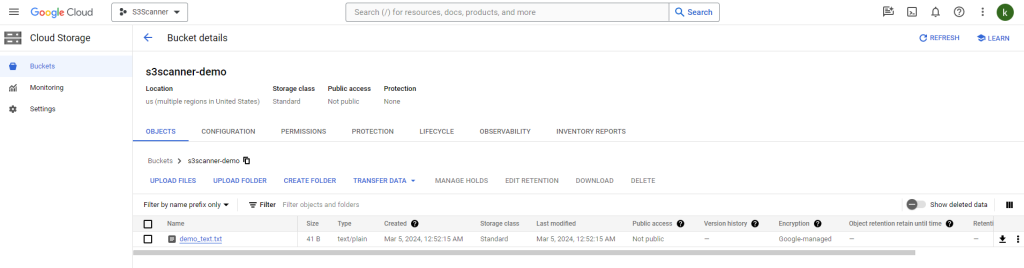

To test your GCP buckets, create a bucket in your GCP account and make sure it doesn’t have public access:

Inside the bucket, add a text file:

To scan the bucket, run the previously mentioned command: s3scanner -bucket s3scanner-demo -provider gcp. You have to provide the -provider gcp flag to tell S3Scanner that you want to scan a GCP bucket. If you don’t provide this flag, S3Scanner uses AWS (the default option).

Your output shows that a bucket exists:

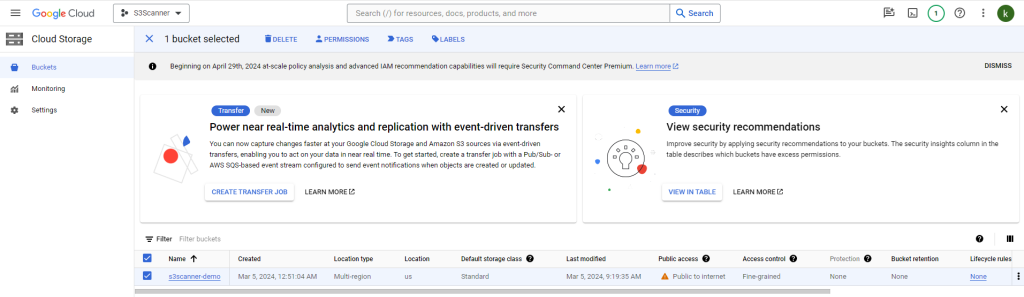

level=info msg="exists | s3scanner-demo | default | AuthUsers: [] | AllUsers: []"Now, change the GCP bucket access to “public” and grant all users access:

Then, scan the GCP bucket. Your output will show that the bucket is available to all users:

level=info msg="exists | s3scanner-demo | default | AuthUsers: [] | AllUsers: [READ, READ_ACP]"Best Practices for Remediation

After you review the results of your scan, make sure to prioritize the identified issues based on their severity. Some common remediations are as follows:

- Adjust bucket permissions: You can restrict access to buckets by adjusting permissions and policies to adhere to the principle of least privilege. Make sure to remove unnecessary public access and ensure that only authorized entities have the required permissions.

- Regularly audit and monitor your S3 bucket configurations: Establish a routine for auditing and monitoring your S3 bucket configurations. You can also set up alerts for any changes to permissions or policies, enabling timely detection and response to potential security incidents. Additionally, you can utilize tools and services such as AWS Config, which helps you assess, audit, and evaluate the configuration of your resources. Moreover, AWS Trusted Advisor helps inspect your environment and provides recommendations to improve security, performance, and cost.

- Encrypt data: Protecting data with encryption involves measures for both data in transit and data at rest. For data in transit, using secure channels such as HTTPS keeps information encrypted between clients and servers. For data at rest, Amazon S3 offers several server-side encryption options, including SSE-S3, SSE-KMS, and SSE-C, as well as client-side encryption.
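For the in-transit half, a common AWS pattern is a bucket policy that denies any request not made over HTTPS, using the aws:SecureTransport condition key. This Python sketch only builds the policy JSON; example-bucket is a placeholder, and you would apply the result with your usual tooling (for example, the AWS console or the AWS CLI’s s3api put-bucket-policy command) after merging it with any statements your bucket policy already contains.

```python
import json


def https_only_policy(bucket: str) -> dict:
    """Build a bucket policy that denies all S3 actions over plain HTTP."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # aws:SecureTransport is "false" when the request used plain HTTP.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


print(json.dumps(https_only_policy("example-bucket"), indent=2))
```

Using an explicit Deny means the rule wins over any Allow statement elsewhere in the policy, so plain-HTTP requests are refused even for otherwise authorized callers.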

Conclusion

In this article, you learned about some of the common security risks associated with Amazon S3 buckets and how S3Scanner can help.

S3Scanner is a valuable tool for anyone leveraging cloud storage through buckets because it helps you scan for vulnerabilities in your environment. With multithreaded scanning, comprehensive permission analysis, custom storage provider support, PostgreSQL database integration, and customizable reporting, S3Scanner is definitely worth exploring.