What Causes Flaky Tests and How to Manage Them

This post reviews five common causes of flaky tests showing up in your build pipeline and how best to manage them.

TL;DR

- Flaky tests fail intermittently without code changes.

- They reduce developer trust and slow CI/CD pipelines.

- Common causes: shared state, infrastructure issues, third-party APIs, poor test design.

- Fix by isolating tests, mocking dependencies, improving test setup, and reducing UI tests.

- You can’t eliminate flakiness completely, but you can minimize it.

- Tools like Aviator’s TestDeck help detect and manage flaky tests.

Flaky tests are non-deterministic tests in your test suite. They pass or fail intermittently, which makes test results unreliable. If that sounds bad to you, you’re right. Here’s why:

- Developer productivity decreases as test results become inaccurate and trust in the test suite plummets

- Multiple, unrelated commits cause similar errors, making maintenance difficult

- Legitimate issues may get ignored due to a high number of false positives

- Repetitive work is required to determine if bugs exist at all

- Diagnosis takes longer because the error may be in the test or in the code

- User dissatisfaction increases as bugs find their way into production

Let’s look at five common causes for flaky tests showing up in your build pipeline and how you can manage them. Additional strategies can be found here.

Concurrency

Concurrency is one of the most common causes of flaky tests. Developers sometimes make incorrect assumptions about the order in which operations run across threads, or one test thread assumes a particular state for a shared resource such as data or memory.

For example, test 2 might assume test 1 passes and use test 1’s output as an input for itself. Or test 2 might assume that test 1 leaves a data variable in state x, but test 1 may not always do that – causing test 2 to fail. Tests can also be flaky if they do not correctly acquire and release shared resources between them.
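The order dependence described above can be reproduced in a few lines. This is a minimal sketch using Python's `unittest`; the `REGISTRY` dictionary and the test names are hypothetical stand-ins for whatever shared state your suite touches.

```python
import unittest

# Hypothetical shared state that outlives a single test.
REGISTRY = {}


class OrderDependentTests(unittest.TestCase):
    def test_1_creates_user(self):
        REGISTRY["alice"] = 1
        self.assertIn("alice", REGISTRY)

    def test_2_reads_user(self):
        # Hidden assumption: test_1_creates_user already ran in this process.
        self.assertEqual(REGISTRY["alice"], 1)


def passes_in_order(names):
    """Run the named tests in the given order, starting from empty state."""
    REGISTRY.clear()
    suite = unittest.TestSuite(OrderDependentTests(name) for name in names)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()


# Same tests, same code, different order: one run passes and the other fails.
print(passes_in_order(["test_1_creates_user", "test_2_reads_user"]))
print(passes_in_order(["test_2_reads_user", "test_1_creates_user"]))
```

Any runner that shuffles, shards, or parallelizes tests can turn the second ordering into an intermittent failure.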

How to Manage

- Use synchronization blocks between tests

- Change the test to accept a wider range of behaviors

- Remove dependencies between tests

- Explicitly set static variables to their default value

- Use resource pools – your tests can acquire and return resources to the pool
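As an illustration of the resource pool idea, here is a minimal sketch built on Python's standard `queue` module. The `ResourcePool` class and the `db_1`/`db_2` names are assumptions made for the example, not part of any particular framework.

```python
import queue
from contextlib import contextmanager


class ResourcePool:
    """Hands out each resource to one caller at a time."""

    def __init__(self, resources):
        self._available = queue.Queue()
        for resource in resources:
            self._available.put(resource)

    @contextmanager
    def acquire(self, timeout=5.0):
        # Blocks until a resource is free; raises queue.Empty on timeout.
        resource = self._available.get(timeout=timeout)
        try:
            yield resource
        finally:
            # Always return the resource, even if the test body raised.
            self._available.put(resource)

    def free_count(self):
        return self._available.qsize()


# Hypothetical usage: two tests can never hold the same database at once.
pool = ResourcePool(["db_1", "db_2"])
with pool.acquire() as db:
    print(f"running test against {db}")
```

Returning the resource in a `finally` block matters here: a test that fails midway should not leak the resource and starve the tests that run after it.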

Unreliable Third-Party APIs

It should come as no surprise that relying on third parties gives you less control over your test environment, and less control increases the chance of unpredictable test results.

Flaky tests can occur when your test suite is dependent on unreliable third-party APIs or functionality maintained by another team. These tests may intermittently fail due to third-party system errors, unreliable network connections, or third-party contract changes.

How to Cope with a Janky Third-Party API

- Use test stubs or test doubles to replace the third-party dependency. Your regular tests can talk to the double instead of the external source

- Test doubles will not detect API contract changes. For this, you will need to develop a separate suite of integration contract tests

- Contract tests can be run separately and need not break the build the same as other tests. They can be run less frequently and be actioned independently of other bugs

- Communicate with the third-party provider to discuss the impact of changes made by them on your system
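The first two points can be sketched with Python's `unittest.mock`. Everything named here is hypothetical: `get_price`, the response fields, and the `REQUIRED_FIELDS` contract stand in for whatever your real provider exposes. The stub keeps regular tests deterministic, while the contract check is the kind of assertion you might run separately against the real API.

```python
from unittest.mock import Mock

# Assumed shape of the provider's response; adjust to the real contract.
REQUIRED_FIELDS = {"amount": float, "currency": str}


def price_with_tax(client, sku, tax_rate=0.2):
    """Code under test: looks up a price through a third-party client."""
    response = client.get_price(sku)  # hypothetical third-party call
    return round(response["amount"] * (1 + tax_rate), 2)


def make_stub_client(amount):
    """Build a test double that answers the way the real client would."""
    stub = Mock()
    stub.get_price.return_value = {"amount": amount, "currency": "USD"}
    return stub


def contract_violations(response):
    """List the ways a response deviates from the assumed contract."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(f"wrong type for {field}: {actual}")
    return problems


# Regular test: no network, no third party, same result every run.
stub = make_stub_client(10.0)
assert price_with_tax(stub, "sku-1") == 12.0
stub.get_price.assert_called_once_with("sku-1")
```

In a separate contract suite, `contract_violations` would be called with a response fetched from the real service, so a changed field shows up there instead of as a random failure in the main build.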

Infrastructure Issues

Test infrastructure failure (network outages, database issues, continuous integration node failure, etc.) is one of the most common causes of flaky tests.

How to Manage

- These issues are typically easier to spot than others. Your debugging process can check these first before attempting to find other causes

- Write fewer end-to-end tests and more unit tests

- Run tests on real devices instead of emulators or simulators

Flaky UI Tests

UI tests are used to test visual logic, browser compatibility, animation, and so on. Since they start at the browser level, they can be very flaky due to a variety of reasons – from missing HTML elements and cookie changes to actual system issues.

If you think of your test suite as a pyramid, UI tests are at the top. They should only occupy a small portion of your test portfolio because they are brittle, expensive to maintain, and time-consuming to run.

How to Fix Flaky UI Tests

- Don’t use UI tests to test back-end logic

- Capture the network layer using the Chrome DevTools Protocol (CDP). CDP allows tools to inspect, debug, and profile Chromium, Chrome, and other Blink-based browsers

Poor Test Writing

Not following good test writing practices can result in a large number of flaky tests in your pipeline.

Some common mistakes include:

- Not adopting a testing framework from the start

- Caching data. Over time, cached data may become stale, affecting test results

- Using random number generators without accounting for the full range of possibilities

- Using floating-point operations without paying attention to underflows and overflows

- Making assumptions about the order of elements in an unordered collection

- Using sleep statements to make your test wait for a state change. Sleep statements are imprecise and one of the biggest causes of flaky tests. It is better to replace them with an explicit wait, such as a waitFor() function that polls until the expected state is reached
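To make the last point concrete, here is a minimal sketch of a polling wait in Python. The `wait_for` helper is written for this example (many test frameworks ship their own equivalent), and the background timer simply simulates a state change that arrives at an unpredictable moment.

```python
import threading
import time


def wait_for(condition, timeout=2.0, interval=0.01):
    """Poll condition() until it returns a truthy value or timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Simulated asynchronous work: the flag flips after a short delay.
done = threading.Event()
timer = threading.Timer(0.05, done.set)
timer.start()

# Returns as soon as the flag is set instead of sleeping a fixed amount.
wait_for(done.is_set)
timer.join()
```

Unlike a fixed sleep, the test proceeds as soon as the condition holds and fails with a clear timeout error when it never does.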

How to Manage Flaky Tests

- Treat test automation flakiness like any other software development effort. Make testing a shared responsibility between developers and analysts

- Use tools to monitor test flakiness. If the flakiness is too high, the tool can quarantine the test (removing it from the critical path) and help resolve issues faster

- Start all tests in a known state

- Avoid hardcoding test data
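The monitoring and quarantine step can be sketched as a small calculation over run history. This is an illustration under stated assumptions, not how any specific tool works: the `(test, commit, passed)` record format, the definition of flaky (a pass and a fail on the same commit), and the 25% threshold are all chosen for the example.

```python
from collections import defaultdict


def flake_rates(runs):
    """Map each test to the share of commits with mixed results.

    runs is an iterable of (test_name, commit, passed) tuples. A test
    counts as flaky on a commit when that commit saw both a pass and a fail.
    """
    outcomes = defaultdict(lambda: defaultdict(set))
    for test, commit, passed in runs:
        outcomes[test][commit].add(passed)
    return {
        test: sum(1 for seen in commits.values() if len(seen) == 2) / len(commits)
        for test, commits in outcomes.items()
    }


def quarantine(runs, threshold=0.25):
    """Sorted names of tests whose flake rate exceeds the threshold."""
    return sorted(t for t, rate in flake_rates(runs).items() if rate > threshold)


history = [
    ("test_login", "c1", True), ("test_login", "c1", False),
    ("test_login", "c2", True),
    ("test_cart", "c1", True), ("test_cart", "c2", True),
    ("test_search", "c1", False), ("test_search", "c2", False),
]
print(quarantine(history))
```

Note that `test_search` fails consistently, so under this definition it is a real failure to fix rather than a flaky test to quarantine.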

Is There a Way to Eliminate Flaky Tests Completely?

The unfortunate answer is no: there is no silver bullet that entirely eliminates flakiness. Even high-performing teams are affected; Google has reported some level of flakiness in 16% of its tests. The best way to deal with the issue is by monitoring test health and having both short-term and long-term mitigation strategies in place.

If flaky tests are a severe problem for your team, or if this is a general topic of interest, check out Aviator TestDeck, a tool to help manage flaky test infrastructure better.

FAQs

What Are Flaky Tests in CI/CD?

Flaky tests are automated tests that sometimes pass and sometimes fail, even when the code hasn’t changed. They are a major pain point in CI/CD because they create confusion, reduce developer confidence, and slow down release cycles by causing false alarms.

How Do I Fix Intermittent Test Failures?

Start by consistently reproducing the failure. Look for issues like race conditions, timing delays, unmocked external services, or leftover state from other tests. Fixing typically involves isolating the test environment, mocking unreliable dependencies, and adding retries or wait conditions where needed.
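One simple way to start reproducing an intermittent failure is to run the suspect test many times and count how often it fails. The sketch below assumes the test is a plain Python callable that raises `AssertionError` on failure; `make_flaky_test` is a hypothetical stand-in that simulates flakiness with a seeded random generator so the demonstration itself stays repeatable.

```python
import random


def count_failures(test_fn, runs=100):
    """Run test_fn repeatedly and count the runs that raise AssertionError."""
    failures = 0
    for _ in range(runs):
        try:
            test_fn()
        except AssertionError:
            failures += 1
    return failures


def make_flaky_test(seed, failure_rate=0.2):
    """Simulated flaky test: fails on roughly failure_rate of its calls."""
    rng = random.Random(seed)  # fixed seed keeps this demo deterministic

    def test():
        assert rng.random() >= failure_rate, "simulated intermittent failure"

    return test


failures = count_failures(make_flaky_test(seed=7), runs=200)
print(f"{failures} of 200 runs failed")
```

A failure count that is neither zero nor the full run count is a strong hint that the test, not the code change, is the unstable part.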

Why Are Automated Tests Flaky?

Flakiness often comes from non-deterministic behavior, such as tests that depend on timing, asynchronous code, network responses, or shared data. Poor test design or improper cleanup between test runs also contributes, especially in UI or integration tests.

What Tools Detect Flaky Tests?

Tools like Buildkite Test Analytics, CircleCI Insights, FlakyTestHunter (for JUnit), TestGrid, and Gradle’s flaky test detection can automatically detect test instability over time by tracking inconsistent outcomes across multiple runs.

Should You Delete a Flaky Test?

Not right away. Try to fix the root cause, as flaky tests often uncover real but hard-to-reproduce bugs. If the test provides little value, and repeated fixes have failed, it may be better to delete or rewrite it to avoid blocking your CI/CD pipeline.

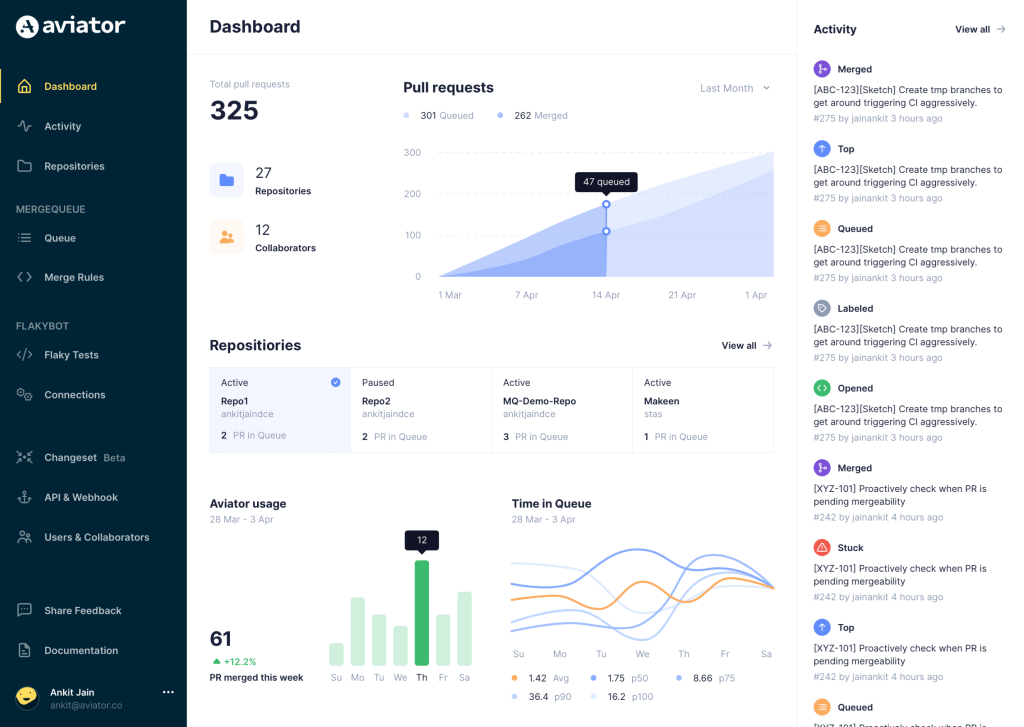

Aviator: Automate Your Cumbersome Processes

Aviator automates tedious developer workflows by managing Git pull requests (PRs) and continuous integration (CI) test runs. It helps your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining security compliance.

There are four key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- TestDeck – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.